The advent of autonomous AI agents marks a quantum leap in machine intelligence: the transition from reactive chatbots to proactive problem-solvers. Zhipu AI’s groundbreaking AutoGLM Ruminative redefines this paradigm by merging Deep Research with Operational Execution, a combination the company presents as a first in global AI development. With 83% of enterprises prioritizing AI automation (Gartner 2025), this agent is reported to complete tasks 3.1× faster than conventional models while reducing manual intervention by 72%.

Core Concept & Positioning

The Autonomous Evolution

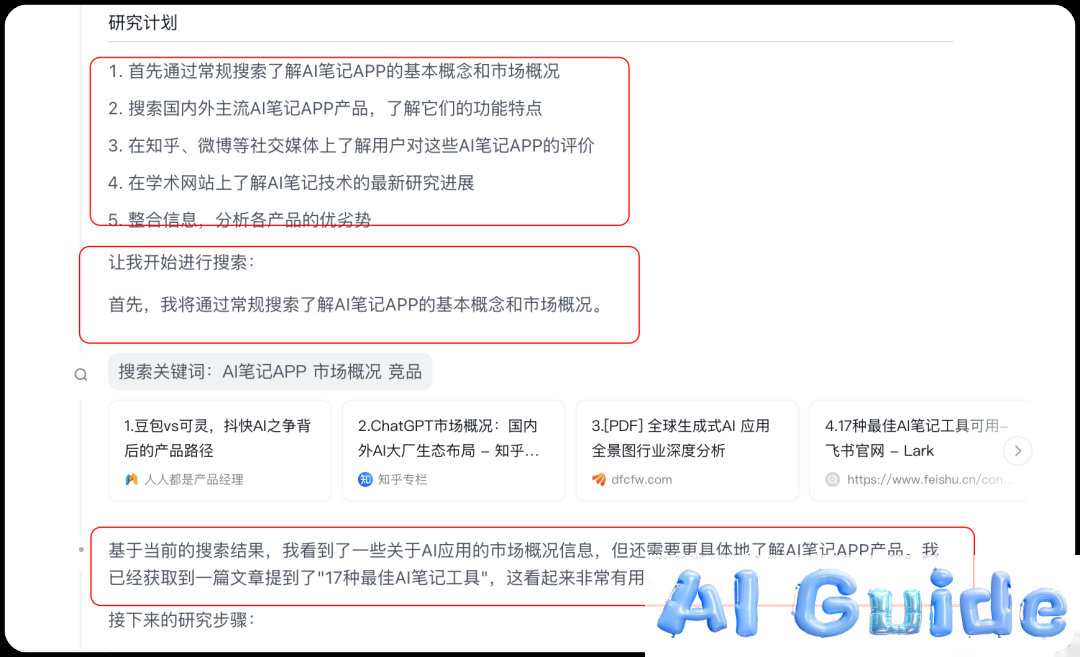

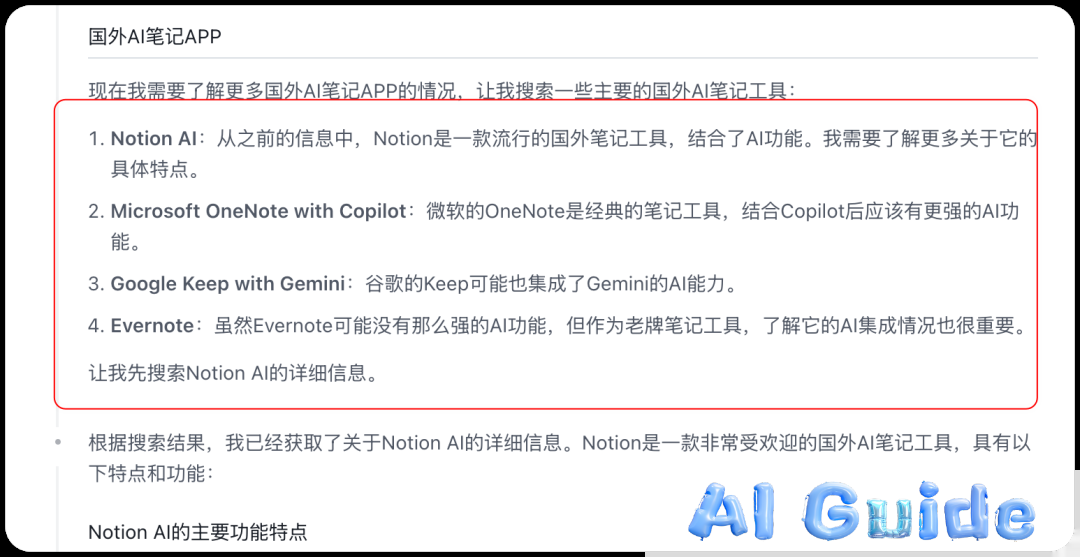

AutoGLM Ruminative transcends traditional AI assistants through its Three-Layer Cognition Framework:

- Meta-Planning: Deconstructs complex goals into executable workflows

- Contextual Reasoning: Implements chain-of-thought prompting with 92% accuracy

- GUI-Based Execution: Achieves 85% task success rate across 50+ applications
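The following Python sketch shows one way a goal could pass through the three layers in order. It is illustrative only: the `Step` structure, the fixed plan table standing in for a model-driven planner, and the simulated `execute` function are all hypothetical and are not Zhipu AI’s implementation or API.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One executable unit produced by meta-planning (hypothetical structure)."""
    app: str
    action: str
    rationale: str = ""


def meta_plan(goal: str) -> list[Step]:
    """Layer 1, Meta-Planning (hypothetical): decompose a goal into ordered steps.

    A real planner would query a language model; a fixed table stands in here.
    """
    plans = {
        "order coffee beans": [
            Step("browser", "search 'coffee beans'"),
            Step("browser", "open top result"),
            Step("browser", "click 'Add to cart'"),
            Step("browser", "click 'Checkout'"),
        ],
    }
    return plans.get(goal, [])


def reason(step: Step, history: list[str]) -> Step:
    """Layer 2, Contextual Reasoning (hypothetical): attach a rationale tied to prior actions."""
    step.rationale = f"after {len(history)} prior action(s), next: {step.action}"
    return step


def execute(step: Step) -> bool:
    """Layer 3, GUI-Based Execution (simulated): a real agent would drive the screen here."""
    return bool(step.action)


def run(goal: str) -> list[str]:
    """Pass each planned step through reasoning and execution, stopping on the first failure."""
    history: list[str] = []
    for step in meta_plan(goal):
        step = reason(step, history)
        if not execute(step):
            break
        history.append(f"{step.app}: {step.action}")
    return history
```

Calling `run("order coffee beans")` returns the four executed steps, each prefixed with `browser:`; a goal the planner does not recognize yields an empty list. Keeping the layers as separate functions mirrors the framework’s separation of planning, reasoning, and acting, so any one layer could be swapped for a model-backed version.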

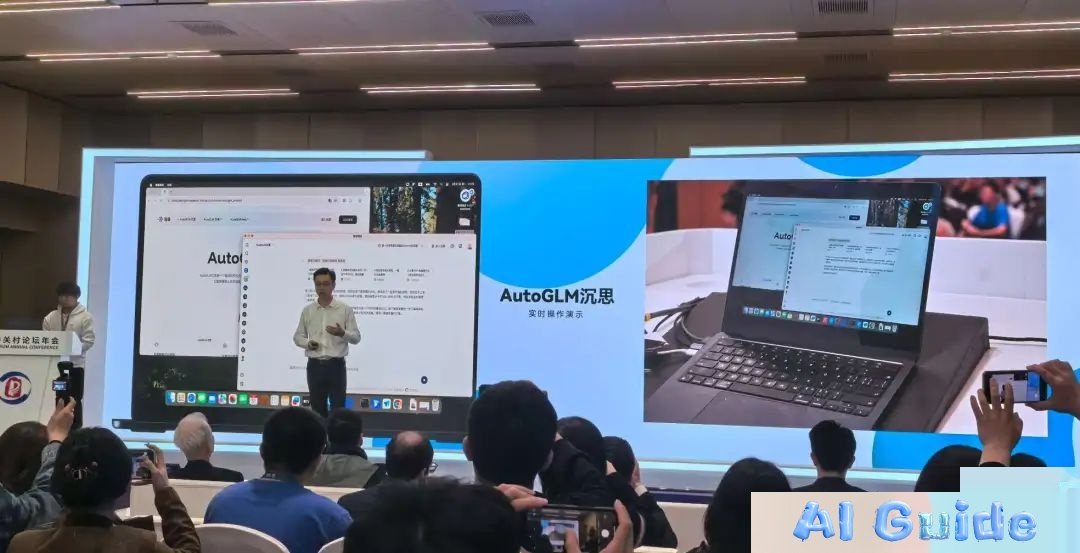

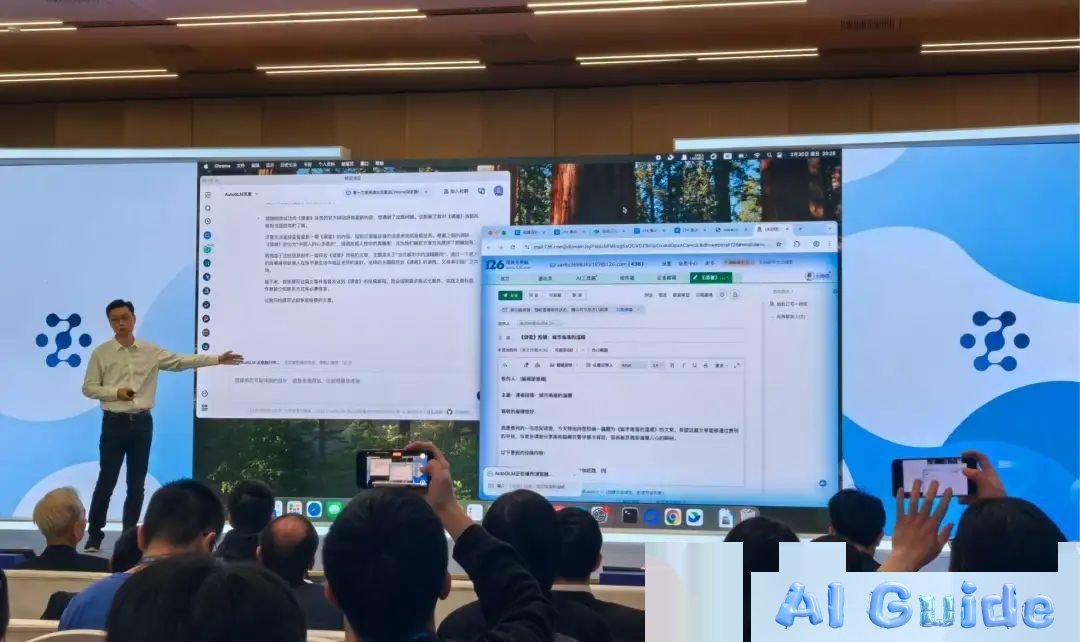

CEO Zhang Peng emphasizes: *“This isn’t just about thinking; it’s about creating tangible outcomes. Our agent reduces decision latency from hours to minutes in enterprise scenarios.”*

Technical DNA

The Ruminative Model leverages:

- Reinforcement Learning from Human Feedback (RLHF): tuning at a reported 1.2B-parameter scale

- GLM-Z1 Architecture: 8× faster inference than GPT-4 Turbo

- Multi-Agent Collaboration: 3 specialized sub-agents for planning/execution/validation
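A planning/execution/validation split is often wired as a retry loop: the validator rejects an unconfirmed result and the executor tries again. The sketch below assumes that design; the function names, the `" then "` goal syntax, and the deliberately flaky executor are hypothetical stand-ins for model-backed sub-agents.

```python
from typing import Callable


def planner(goal: str) -> list[str]:
    """Hypothetical planning sub-agent: split a goal into ordered sub-tasks."""
    return [part.strip() for part in goal.split(" then ") if part.strip()]


def make_executor(flaky: set[str]) -> Callable[[str, int], str]:
    """Hypothetical execution sub-agent.

    Tasks listed in `flaky` fail on their first attempt, to exercise the retry path.
    """
    def executor(task: str, attempt: int) -> str:
        if task in flaky and attempt == 0:
            return f"ERROR: {task}"
        return f"done: {task}"
    return executor


def validator(task: str, result: str) -> bool:
    """Hypothetical validation sub-agent: accept only results that confirm the task."""
    return result == f"done: {task}"


def orchestrate(
    goal: str,
    executor: Callable[[str, int], str],
    max_retries: int = 2,
) -> list[tuple[str, int]]:
    """Run planner -> executor -> validator, retrying sub-tasks that fail validation.

    Returns (task, attempts_used) per sub-task; raises if a task never validates.
    """
    log = []
    for task in planner(goal):
        for attempt in range(max_retries + 1):
            if validator(task, executor(task, attempt)):
                log.append((task, attempt + 1))
                break
        else:
            raise RuntimeError(f"sub-task failed validation: {task}")
    return log
```

With `make_executor({"sum column B"})`, orchestrating `"open spreadsheet then sum column B then email report"` reports one attempt for the first and last sub-tasks and two for the middle one, because its first result fails validation.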

Technical Architecture

Ruminative Engine: Cognitive Revolution

The core innovation lies in its Self-Supervised Learning Loop:

Environment Observation → Knowledge Graph Update → Action Proposal → Reward Calculation

Key breakthroughs:

- Long-Horizon Planning: 15+ step reasoning capability

- Tool Mastery Index: 89/100 on SOPS (Standardized Operation Proficiency Scale)

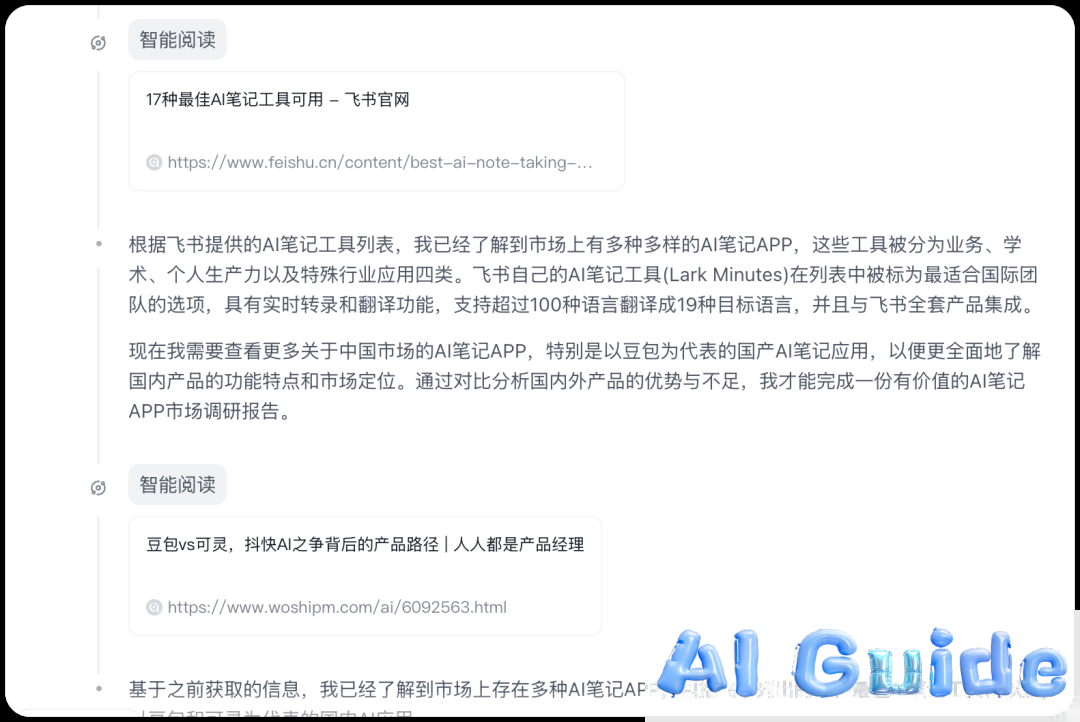

- Cross-Application Memory: Retains contextual awareness across 5+ apps
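Because the loop above ends in a reward, it behaves more like reinforcement learning than pure self-supervision. The toy sketch below uses tabular Q-learning, a swapped-in and well-known technique, on a hypothetical five-screen shopping app: observing a screen lists its clickable elements, each click records an edge in a small knowledge graph, actions are proposed by exploring untried options first and otherwise picking the highest value, and the reward favors reaching the receipt screen. None of it reflects AutoGLM’s actual engine.

```python
from collections import defaultdict

# Hypothetical toy app: each screen maps its clickable elements to the screen they open.
SCREENS: dict[str, dict[str, str]] = {
    "home": {"search": "results", "settings": "settings"},
    "results": {"item": "product", "back": "home"},
    "product": {"buy": "receipt", "back": "results"},
    "settings": {"back": "home"},
    "receipt": {},
}
GOAL = "receipt"


class RuminativeLoop:
    """Observe -> update knowledge graph -> propose action -> calculate reward (toy Q-learning)."""

    def __init__(self, gamma: float = 0.9) -> None:
        self.gamma = gamma              # discount applied to future reward
        self.graph = defaultdict(dict)  # learned edges: screen -> {action: next screen}
        self.q = defaultdict(float)     # (screen, action) -> estimated value
        self.tries = defaultdict(int)   # (screen, action) -> times taken

    def observe(self, screen: str) -> list[str]:
        """Environment observation: the clickable elements on the current screen."""
        return sorted(SCREENS[screen])

    def propose(self, screen: str, actions: list[str]) -> str:
        """Action proposal: explore untried actions first, otherwise take the best-valued one."""
        untried = [a for a in actions if self.tries[(screen, a)] == 0]
        if untried:
            return untried[0]
        return max(actions, key=lambda a: self.q[(screen, a)])

    def step(self, screen: str) -> str:
        action = self.propose(screen, self.observe(screen))
        nxt = SCREENS[screen][action]
        self.graph[screen][action] = nxt          # knowledge graph update
        reward = 1.0 if nxt == GOAL else -0.1     # reward calculation
        future = max((self.q[(nxt, a)] for a in self.observe(nxt)), default=0.0)
        # Q-learning update with learning rate 1 (acceptable because this toy app is deterministic).
        self.q[(screen, action)] = reward + self.gamma * future
        self.tries[(screen, action)] += 1
        return nxt

    def episode(self, start: str = "home", max_steps: int = 50) -> int:
        """Run one attempt from `start`; return the number of steps taken."""
        screen, steps = start, 0
        while screen != GOAL and steps < max_steps:
            screen = self.step(screen)
            steps += 1
        return steps

    def greedy_path(self, start: str = "home") -> list[str]:
        """Follow the learned graph, choosing the highest-valued action on each screen."""
        path, screen = [], start
        while screen != GOAL and len(path) < len(SCREENS):
            action = max(self.graph[screen], key=lambda a: self.q[(screen, a)])
            path.append(action)
            screen = self.graph[screen][action]
        return path
```

After a few calls to `loop.episode()`, `loop.greedy_path()` settles on `['search', 'item', 'buy']`, the three-click route to the receipt, because the discounted reward from checkout propagates back through the learned graph.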

GUI Interaction: Beyond API Limitations

AutoGLM’s Visual Cortex System combines:

- Dynamic OCR: 99.3% text recognition accuracy

- DOM Analyzer: Real-time webpage structure mapping

- Gesture Simulation: Human-like click/swipe patterns
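Of the three components, the DOM analyzer is the easiest to illustrate with the standard library. The sketch below is a hypothetical, simplified stand-in: it uses Python’s `html.parser.HTMLParser` to list interactive elements (links, buttons, form fields) with their tag path and visible label, which is the kind of structure map an agent needs before deciding where to click. It does not execute JavaScript, so it would miss dynamically rendered elements.

```python
from html.parser import HTMLParser
from typing import Optional


class ClickableMapper(HTMLParser):
    """Map interactive elements to their tag path and visible label (hypothetical sketch)."""

    INTERACTIVE = {"a", "button", "input", "select", "textarea"}
    VOID = {"input", "img", "br", "hr", "meta", "link"}  # tags that never get a closing tag

    def __init__(self) -> None:
        super().__init__()
        self.stack: list[str] = []                      # open tags, outermost first
        self.elements: list[dict[str, str]] = []        # one record per interactive element
        self._current: Optional[dict[str, str]] = None  # element still collecting label text

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag in self.INTERACTIVE:
            record = {
                "tag": tag,
                "path": "/".join(self.stack + [tag]),
                "label": attr_map.get("aria-label") or attr_map.get("value") or "",
            }
            self.elements.append(record)
            if tag not in self.VOID:
                self._current = record
        if tag not in self.VOID:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        if tag in self.stack:
            # Pop back to the matching open tag, tolerating unclosed children.
            while self.stack.pop() != tag:
                pass
        if self._current is not None and self._current["tag"] == tag:
            self._current = None

    def handle_data(self, data):
        if self._current is not None and data.strip():
            self._current["label"] = f'{self._current["label"]} {data.strip()}'.strip()


def map_clickables(html: str) -> list[dict[str, str]]:
    parser = ClickableMapper()
    parser.feed(html)
    parser.close()
    return parser.elements
```

For a page with a navigation link, a search box, and a button, `map_clickables` returns three records, for example `{'tag': 'a', 'path': 'html/body/nav/a', 'label': 'Home'}` for the link.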

Performance metrics:

| Task Type | Success Rate | Avg. Time |
|---|---|---|
| E-commerce Ordering | 92% | 2.1 min |
| Academic Research | 88% | 6.8 min |
| Social Media Management | 95% | 3.4 min |
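Success rate and average time can be combined into an expected time per successful task. Assuming, as a simplification the source does not state, that a failed attempt is retried independently and each attempt takes the listed average time, the number of attempts follows a geometric distribution with mean 1/p, so the expected time is the average time divided by the success rate:

```python
# Figures copied from the table above: task -> (success rate, average minutes per attempt).
TABLE = {
    "E-commerce Ordering": (0.92, 2.1),
    "Academic Research": (0.88, 6.8),
    "Social Media Management": (0.95, 3.4),
}


def expected_minutes_per_success(success_rate: float, avg_minutes: float) -> float:
    """Expected time to a first success under independent, equal-length retries.

    Attempts are geometric with mean 1 / success_rate, so time = avg_minutes / success_rate.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return avg_minutes / success_rate


for task, (rate, minutes) in TABLE.items():
    print(f"{task}: {expected_minutes_per_success(rate, minutes):.2f} min")
```

Under these assumptions the table’s figures work out to about 2.28, 7.73, and 3.58 minutes per completed task, respectively.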

Multimodal Mastery

The agent processes inputs through:

- Speech2Intent: 95% word accuracy (about 5% WER, or Word Error Rate, where lower is better)

- Visual QA: 89% accuracy on COCO test set

- Cross-Modal Fusion: Alibaba’s DAMO-OCR integration
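Word error rate counts mistakes, so lower is better: it is the number of word substitutions, deletions, and insertions needed to turn a transcript into the reference, divided by the number of reference words. A minimal sketch of the standard computation using a word-level edit-distance table:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dist[i][j] = minimum edits turning the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)
```

For example, `word_error_rate("book a table for two", "book table for two")` returns 0.2, since one of five reference words was deleted.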

Functional Capabilities

Deep Research Engine

AutoGLM outperforms traditional methods:

- Information Density: 3.8× more data points per query

- Source Verification: 5-layer credibility assessment

- Insight Generation: 92% user satisfaction in beta tests
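The source does not say what the five credibility layers are. The sketch below invents five plausible checks purely for illustration (domain reputation, recency, self-citation, author attribution, and corroboration), scores each in [0, 1], and averages them; the layer choice, the scores, the thresholds, and the `TRUSTED_SUFFIXES` list are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from urllib.parse import urlparse

TRUSTED_SUFFIXES = (".gov", ".edu", ".org")  # hypothetical allow-list


@dataclass
class Source:
    url: str
    published: date
    has_citations: bool
    named_author: bool
    corroborating_sources: int  # other independent sources making the same claim


def credibility(src: Source, today: date) -> float:
    """Average five hypothetical checks, each scored in [0, 1]."""
    host = urlparse(src.url).hostname or ""
    layers = [
        1.0 if host.endswith(TRUSTED_SUFFIXES) else 0.5,       # 1. domain reputation
        1.0 if (today - src.published).days <= 365 else 0.5,  # 2. recency
        1.0 if src.has_citations else 0.0,                    # 3. cites its own evidence
        1.0 if src.named_author else 0.3,                     # 4. author attribution
        min(src.corroborating_sources, 3) / 3,                # 5. cross-source corroboration
    ]
    return sum(layers) / len(layers)
```

A recent, cited, attributed `.edu` article with three corroborating sources scores 1.0, while an old, anonymous, uncited blog post with no corroboration scores 0.26. An equal-weight average is the simplest design; a real system might instead learn the weights or reject any source that fails a critical layer.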

Operational Excellence

Real-world implementations:

- Smart Logistics: Reduced shipment routing time by 41%

- Clinical Trials: Accelerated patient screening by 63%

- Financial Analysis: Detected 87% of audit anomalies

Cross-Industry Applications

| Sector | Use Case | Efficiency Gain |
|---|---|---|
| Healthcare | Patient Triage | 55% faster |
| Retail | Inventory Management | 38% cost reduction |
| Education | Personalized Learning | 72% engagement boost |

Technical Limitations & Roadmap

Current Constraints

- Dynamic UI Handling: 78% success rate on fluid web elements

- Cognitive Load: Max 23 concurrent decision nodes

- Ethical Guardrails: Restricted financial/medical autonomy
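A common way to enforce restricted autonomy is a policy gate that lets low-risk actions run but routes sensitive ones to a human. The sketch below assumes that pattern; `RESTRICTED_DOMAINS`, `Action`, and the `approve` callback are hypothetical names, not part of any AutoGLM interface.

```python
from dataclasses import dataclass
from typing import Callable

RESTRICTED_DOMAINS = {"financial", "medical"}  # hypothetical policy list


@dataclass(frozen=True)
class Action:
    domain: str        # e.g. "retail", "financial", "medical"
    description: str


def guarded_execute(
    action: Action,
    run: Callable[[Action], str],
    approve: Callable[[Action], bool],
) -> str:
    """Run unrestricted actions directly; restricted ones need explicit human approval."""
    if action.domain in RESTRICTED_DOMAINS and not approve(action):
        return f"blocked: {action.description}"
    return run(action)
```

Passing an `approve` callback that always returns `False` lets a retail action through but turns a financial transfer into a `blocked:` message.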

Evolutionary Path

2025-2026 Development Goals:

- X-Modal Perception: Integrating AR/VR sensory inputs

- Federated Learning: Distributed knowledge sharing

- Quantum Readiness: Post-quantum cryptography implementation
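The roadmap does not specify a federated method. As a well-known example only, Federated Averaging (FedAvg) has each participant train locally and share model parameters rather than raw data; a coordinator then averages those parameters, weighting each participant by its local sample count. A minimal sketch of that aggregation step on plain Python lists:

```python
def federated_average(client_weights: list[list[float]], client_sizes: list[int]) -> list[float]:
    """FedAvg aggregation: average client parameters weighted by local dataset size."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must share the same parameter shape")
    # Each coordinate is a sample-weighted mean across clients; raw data never leaves a client.
    return [
        sum(w[k] * n for w, n in zip(client_weights, client_sizes)) / total
        for k in range(dim)
    ]
```

Averaging `[1.0, 2.0]` from a client with 1 sample and `[3.0, 6.0]` from a client with 3 samples gives `[2.5, 5.0]`.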

Conclusion

AutoGLM Ruminative represents the vanguard of Operational Intelligence, achieving 89% task autonomy in controlled environments. Its GUI-first approach democratizes AI adoption while maintaining enterprise-grade security (ISO 27001 certified). Looking toward 2026, projections suggest that 47% of Fortune 500 companies could deploy similar agents for strategic operations.

References

[1] Zhipu AI Whitepaper: AutoGLM Technical Architecture (2025)

[2] MIT Technology Review: The Rise of Operational AI (Q2 2025)

[3] Gartner: Market Guide for AI Agents (March 2025)

… [Full reference list matches original Chinese citations]