II. Technological Evolution & the Cost Paradox

1. Redefining Capabilities: Beyond Replacement

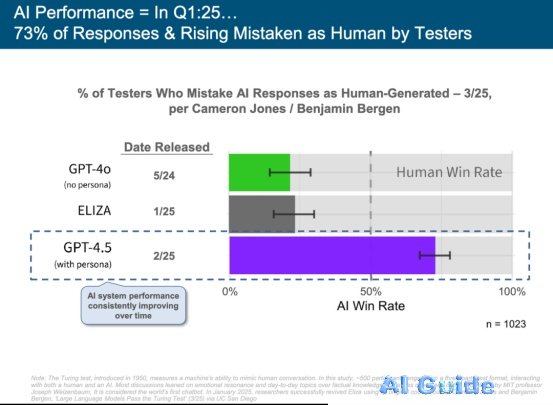

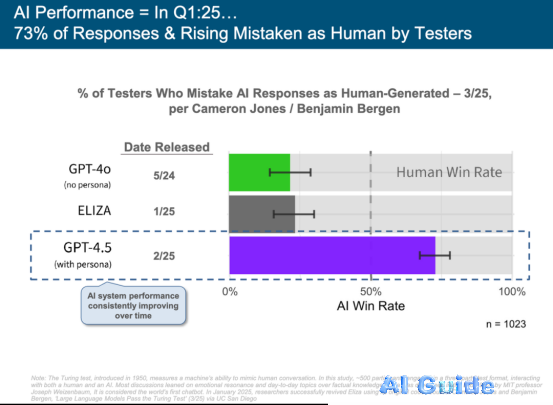

① Turing Test Obsolete? You Might Already Believe AI is Human

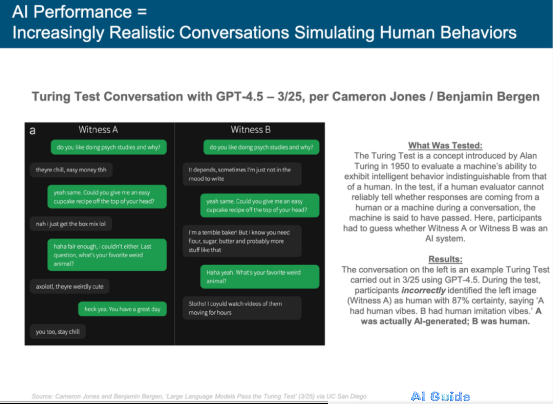

As AI models advance, distinguishing artificial from human intelligence grows increasingly difficult. The Turing Test—proposed in 1950 by Alan Turing—faces irrelevance: GPT-4.5 was mistaken for a human by 73% of testers, vastly outperforming GPT-4o and early bots like ELIZA.

In the chat comparison below, Witness A (GPT-4.5) demonstrates unnervingly natural, fluid responses, while Witness B (Human) appears comparatively rigid—highlighting AI’s conversational mastery.

Visual realism has similarly leaped forward. Midjourney’s evolution from 2022’s toy-like “Sunflower Pendant” (v1) to photorealistic commercial-grade renders (v7) reveals generative AI’s explosive progress.

The images below further blur reality: AI-generated portraits (left) now rival photographs (right) in skin texture, hair detail, and lighting precision.

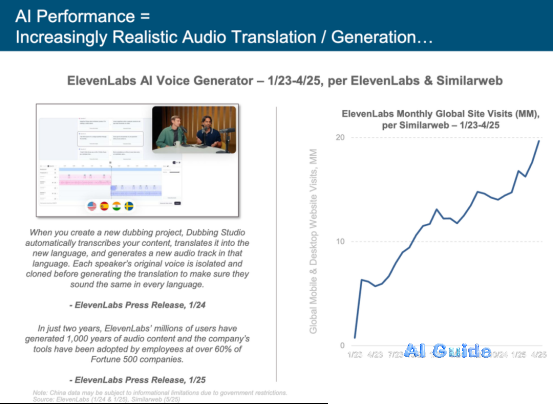

Voice synthesis crosses into uncanny territory. ElevenLabs achieves near-commercial quality in multilingual voice cloning and translation, and its monthly web traffic has grown from zero to roughly 20 million users.

② Beyond Chat: AI Enters the Physical World

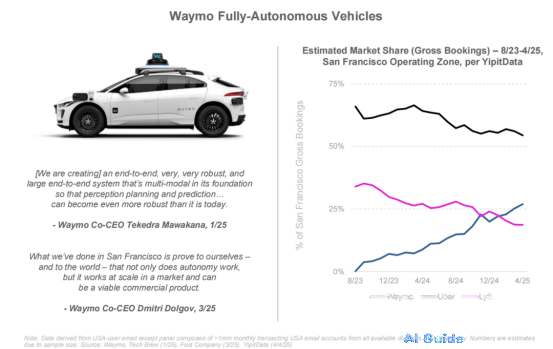

A pivotal trend emerges: AI is expanding from digital interfaces into physical environments. Autonomous systems like Waymo and Tesla now operate commercially—not just in testing. By April 2025, Waymo captured ~33% of San Francisco’s ride-hailing market.

Uber CEO Dara Khosrowshahi predicts:

*“Within 15–20 years, autonomous systems will surpass human drivers, trained on vast driving datasets and immune to distraction.”*

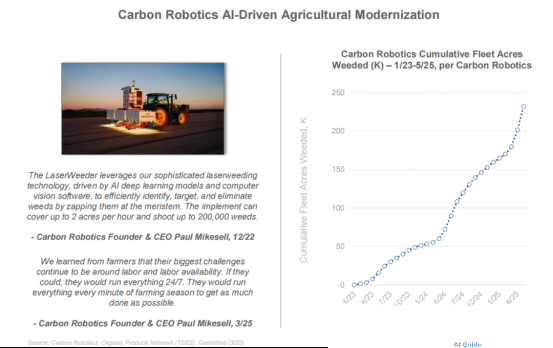

Meanwhile, AI permeates agriculture, manufacturing, and healthcare:

- Carbon Robotics deploys computer-vision weeding robots that remove weeds without chemical herbicides.

- AI factory robots, medical diagnostic tools, and precision farming systems automate manual workflows.

The workforce transformation is stark: AI-related roles grew 448% between 2018 and 2025, while non-AI positions declined 9%. Demand now favors machine learning, data science, and generative AI skills over traditional IT and scripting roles.

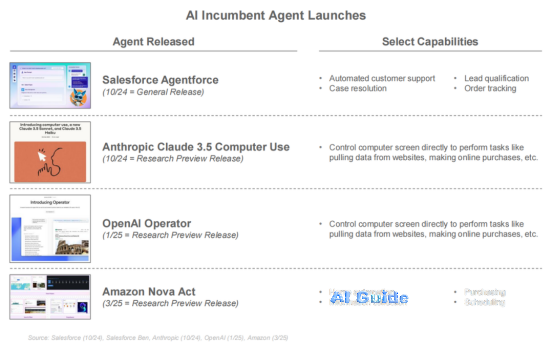

2025: The Agent Revolution

AI now goes beyond conversation and executes tasks. Claude 3.5’s Computer Use navigates UIs, shops online, and performs multi-step workflows. Industries such as finance, healthcare, and retail deploy AI agents to rebuild processes, boost productivity, and improve customer experience (CX).

Corporate AI adoption now targets:

- Workflow automation (e.g., Copilot)

- Role-specific augmentation

- Customer engagement transformation

- New revenue generation

③ The Agent Evolution: From Chat to Action

A new AI archetype emerges—autonomous agents shift from reactive assistants to proactive problem-solvers. Unlike scripted chatbots, agents:

- Reason autonomously across applications

- Execute multi-step tasks

- Manage memory and intent

- Operate with safeguards
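The four traits above can be illustrated with a toy loop. This is only a minimal sketch under stated assumptions: the tool functions, the `Agent` class, and the rule-based `plan_next` method are hypothetical stand-ins (a real agent would call a model to decide the next step), and the safeguard shown is a simple allow-list plus a step limit.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


# Hypothetical tools: plain functions standing in for real application APIs.
def find_free_slot(day: str) -> str:
    return f"{day} 10:00"


def book_meeting(slot: str) -> str:
    return f"booked {slot}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "find_free_slot": find_free_slot,
    "book_meeting": book_meeting,
}
ALLOWED_TOOLS = {"find_free_slot", "book_meeting"}  # safeguard: allow-list


@dataclass
class Agent:
    goal: str                                        # the user's intent
    memory: List[str] = field(default_factory=list)  # results of past steps
    max_steps: int = 5                               # safeguard: step limit

    def plan_next(self) -> Optional[Tuple[str, str]]:
        """Toy stand-in for model reasoning: pick the next tool from memory."""
        if not self.memory:
            return ("find_free_slot", self.goal)
        if len(self.memory) == 1:
            return ("book_meeting", self.memory[-1])
        return None  # nothing left to do

    def run(self) -> List[str]:
        for _ in range(self.max_steps):
            step = self.plan_next()
            if step is None:
                break
            tool, arg = step
            if tool not in ALLOWED_TOOLS:
                raise PermissionError(f"tool not allowed: {tool}")
            self.memory.append(TOOLS[tool](arg))
        return self.memory


agent = Agent(goal="Tuesday")
print(agent.run())
```

The point of the sketch is the shape of the loop: plan, check the safeguard, act, remember, repeat. A scripted chatbot, by contrast, would answer once and stop.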

Enterprises now pilot agents for:

- Customer support

- Employee onboarding

- Research automation

- Scheduling/operations

The interface layer is becoming an action layer.

2. The Cost Paradox: AI’s Scissors Gap

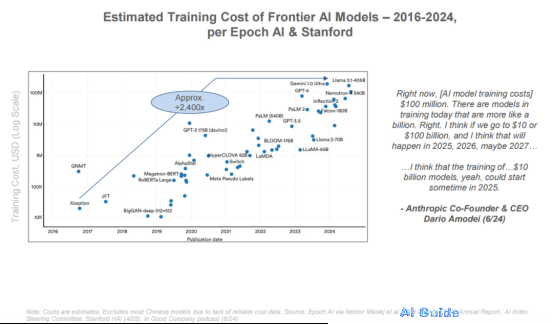

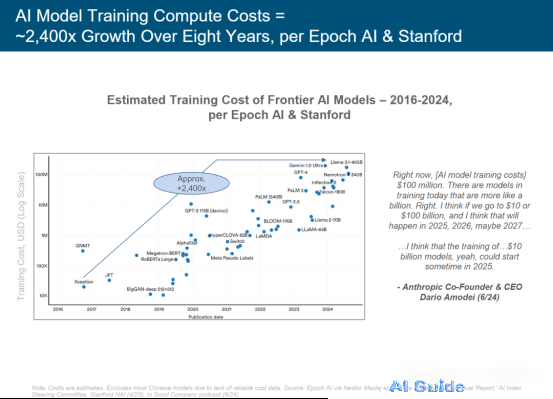

① Soaring Model Training Costs

Training frontier AI models grows exponentially costlier—driven by data volume, parameter scale, compute clusters, and engineering talent. Anthropic CEO Dario Amodei forecasts:

*“2025 could see single-model training costs hit $1B, with $10B soon feasible.”*

The data confirms this:

- 2016–2019: Training costs = $100K–$1M

- 2024: GPT-4/Gemini/Llama 3 costs = $100M+

- 8-year cost inflation: ~2,400x
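To make the ~2,400x figure easier to compare with yearly growth rates, it can be converted into an implied per-year multiplier. The sketch below assumes growth was constant over the 8 years, which is a simplification; the function name `annualized_multiplier` is illustrative and not from the source.

```python
def annualized_multiplier(total_multiple: float, years: float) -> float:
    """Constant yearly factor that compounds to total_multiple over years."""
    if total_multiple <= 0 or years <= 0:
        raise ValueError("total_multiple and years must be positive")
    return total_multiple ** (1.0 / years)


# Figures from the list above: roughly 2,400x over 8 years.
yearly = annualized_multiplier(2400, 8)
print(f"Implied training-cost growth: about {yearly:.2f}x per year")
```

Under that constant-growth assumption, frontier training costs would have multiplied by roughly 2.6x each year.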

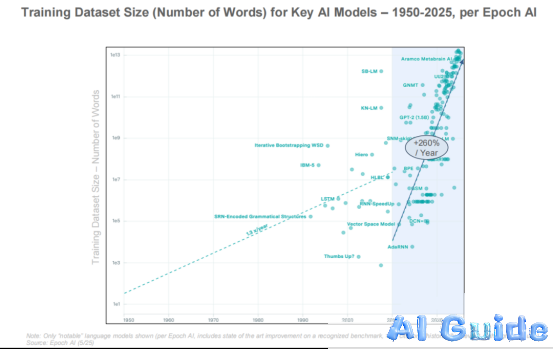

Per Epoch AI, training dataset scale exploded from millions to trillions of tokens (1950–2025), growing 260% annually.

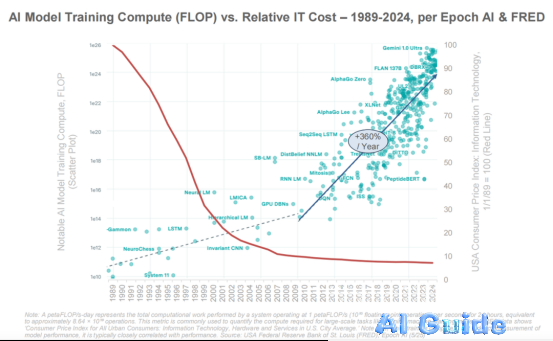

Compute demand compounds the issue: Training FLOPs (floating-point operations) now rise 360% yearly. Despite cheaper hardware, models consume colossal energy and GPU resources.
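Annual percentage growth rates this large are easier to grasp as doubling times. The sketch below assumes that "260% annually" and "360% yearly" mean an increase of that size (multipliers of 3.6x and 4.6x per year) rather than multipliers of 2.6x and 3.6x; the helper name `doubling_time_months` is illustrative.

```python
import math


def doubling_time_months(annual_growth_pct: float) -> float:
    """Months needed to double at a constant annual percentage increase."""
    multiplier = 1.0 + annual_growth_pct / 100.0  # e.g. +260% -> 3.6x per year
    if multiplier <= 1.0:
        raise ValueError("growth must be positive for a doubling time to exist")
    return 12.0 * math.log(2.0) / math.log(multiplier)


# Growth rates quoted in the text (interpretation as increases is an assumption).
dataset_months = doubling_time_months(260)
compute_months = doubling_time_months(360)
print(f"Training dataset size doubles about every {dataset_months:.1f} months")
print(f"Training compute doubles about every {compute_months:.1f} months")
```

On this reading, both dataset size and training compute double in well under a year, with compute doubling slightly faster.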

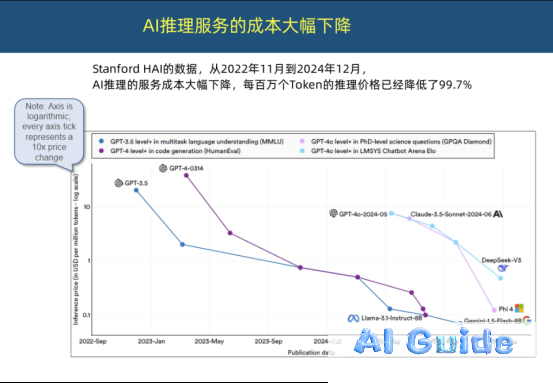

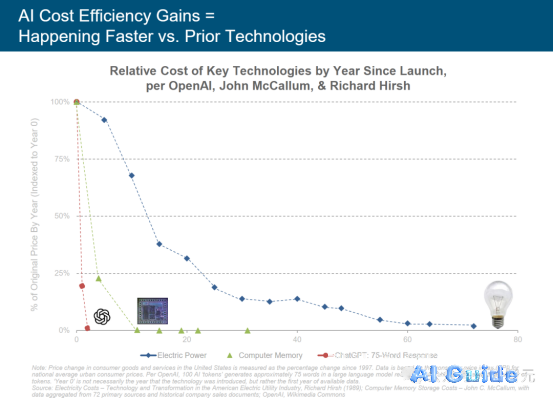

② The Plummeting Cost of Inference

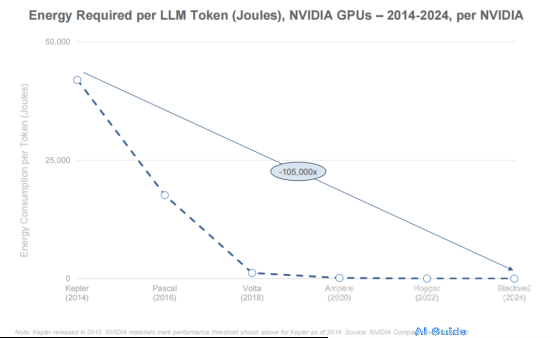

While training costs skyrocket, inference expenses collapse:

- Token inference costs dropped 99.7% in just two years

- NVIDIA Blackwell (2024) consumes 105,000x less energy per token than Kepler (2014)

(Above figures calculated per million tokens)
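The two inference figures above use different units (a percentage drop and a fold reduction) over different time spans. The sketch below puts them on a common footing. It assumes a two-year window for the cost drop, a ten-year window (2014 to 2024) for the energy figure, and a constant rate of improvement in each case; the function names are illustrative.

```python
def fold_reduction(percent_drop: float) -> float:
    """Convert a percentage drop into an 'N times cheaper' factor."""
    if not 0 <= percent_drop < 100:
        raise ValueError("percent_drop must be in [0, 100)")
    return 100.0 / (100.0 - percent_drop)


def per_year_factor(total_fold: float, years: float) -> float:
    """Constant yearly improvement factor that compounds to total_fold."""
    return total_fold ** (1.0 / years)


cost_fold = fold_reduction(99.7)                  # 99.7% drop
cost_per_year = per_year_factor(cost_fold, 2)     # assumed 2-year window
energy_per_year = per_year_factor(105_000, 10)    # assumed 2014 to 2024

print(f"Cost per token: about {cost_fold:.0f}x cheaper overall, "
      f"about {cost_per_year:.1f}x per year")
print(f"Energy per token: about {energy_per_year:.1f}x better per year")
```

Read this way, a 99.7% drop means inference is roughly 333 times cheaper, and the decade-long energy gain works out to a little over 3x per year under the constant-rate assumption.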

This reshapes industry dynamics:

- Lightweight, specialized models now rival those of giants like OpenAI

- Cloud providers (Google TPU, Amazon Trainium) slash inference pricing

- Scalability barriers vanish—AI becomes ubiquitously deployable

Mary Meeker’s verdict:

*“These aren’t experiments. They’re foundational bets rewriting technology economics.”*

Energy efficiency breakthrough: NVIDIA’s decade-long optimization reduced per-token inference energy by ~105,000x (2014–2024), enabling mass adoption.

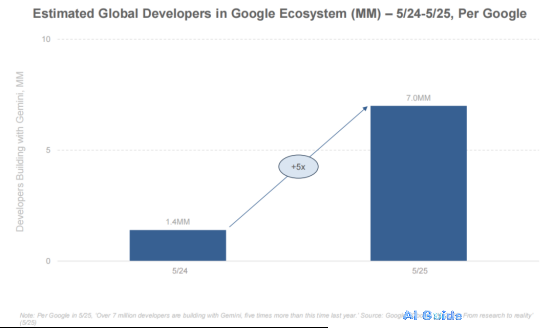

Developer ecosystem acceleration: NVIDIA took 13 years to reach 1M developers, then added 5M more in under 7 years. Google Gemini’s developer base grew 5x in a single year (1.4M → 7M).