Today, we dive into a highly anticipated Chinese AI model, DeepSeek R1, and its latest minor version upgrade (0528)!

🔍 DeepSeek R1 0528: Quick Overview

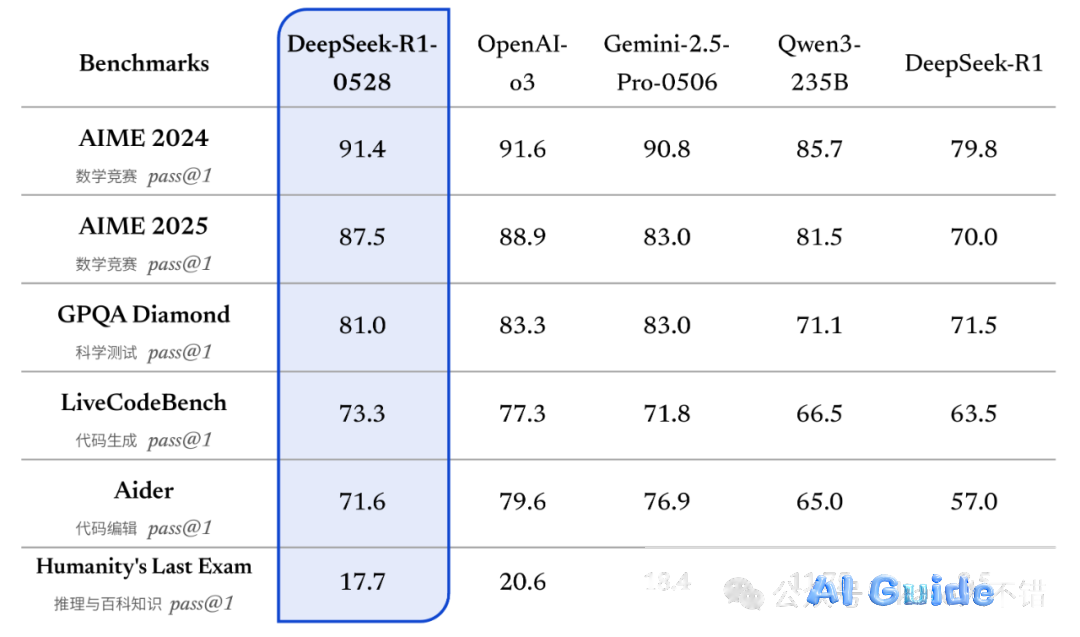

DeepSeek has rolled out a minor version upgrade (0528) for its R1 model, claiming significant performance improvements. According to DeepSeek’s official benchmarks, it now rivals OpenAI’s o3 and Google’s Gemini 2.5 Pro on multiple metrics. The update is already live on DeepSeek’s official website and app, and the API has been updated in sync.

Notably, the 0528 release is still built on the DeepSeek-V3 Base model, with the improvements layered on top of it. Key comparative metrics include:

- Math: DeepSeek R1 0528 performs nearly on par with OpenAI’s o3.

- Science: o3 holds a slight edge.

- LiveCodeBench: DeepSeek R1 0528 scores higher than Gemini 2.5 Pro.

- Aider leaderboard: Gemini 2.5 Pro leads marginally.

In our own tests, each model shone in different scenarios.

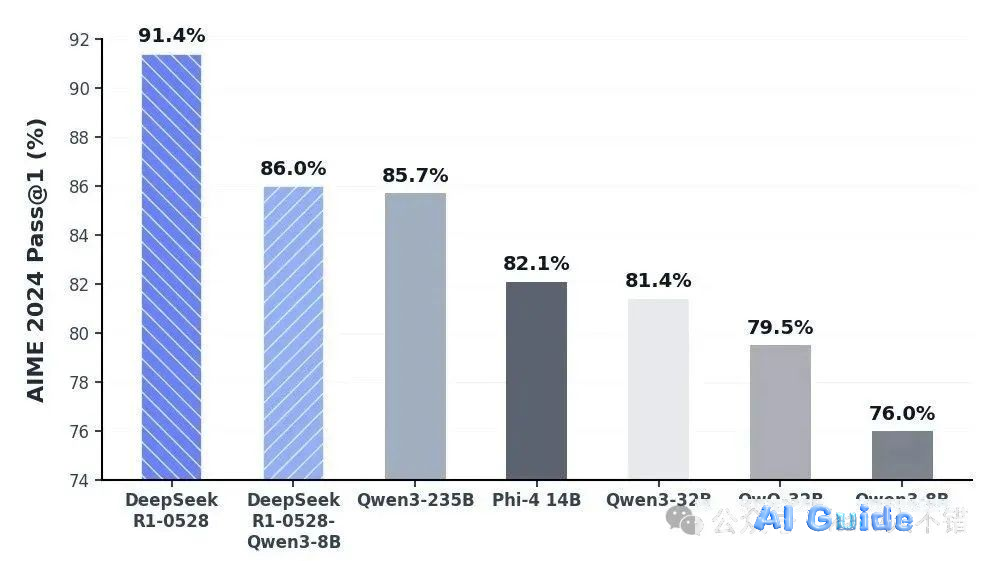

DeepSeek also highlighted a distilled lightweight model, DeepSeek-R1-0528-Qwen3-8B. Remarkably, this compact 8B model outperformed Qwen3’s 235B variant on the AIME 2024 math-competition benchmark, a testament to efficient knowledge distillation.

💡 Why DeepSeek R1 0528 Stands Out

DeepSeek emphasizes that R1 0528 exposes its full reasoning chain, which it describes as valuable for academic research and for industrial development of lightweight models. In one test, the model spent over 10 minutes meticulously laying out its step-by-step reasoning, a stark contrast to OpenAI’s o3, which keeps its raw reasoning hidden. This openness is revolutionary!

Additional upgrades include:

- Reduced hallucination rates in rewriting, polishing, summarization, and reading comprehension.

- Enhanced performance in creative writing (essays, fiction, prose), frontend code generation, and role-playing.

Beyond DeepSeek’s own platform, the model is also available through third-party API providers such as OpenRouter.

🥊 DeepSeek R1 vs. Gemini 2.5 Pro: Multi-Scenario Showdown

Note: The Gemini model tested is 2.5 Pro (0506); the DeepSeek model is R1 (0528).

🔧 Part 1: Coding Capabilities

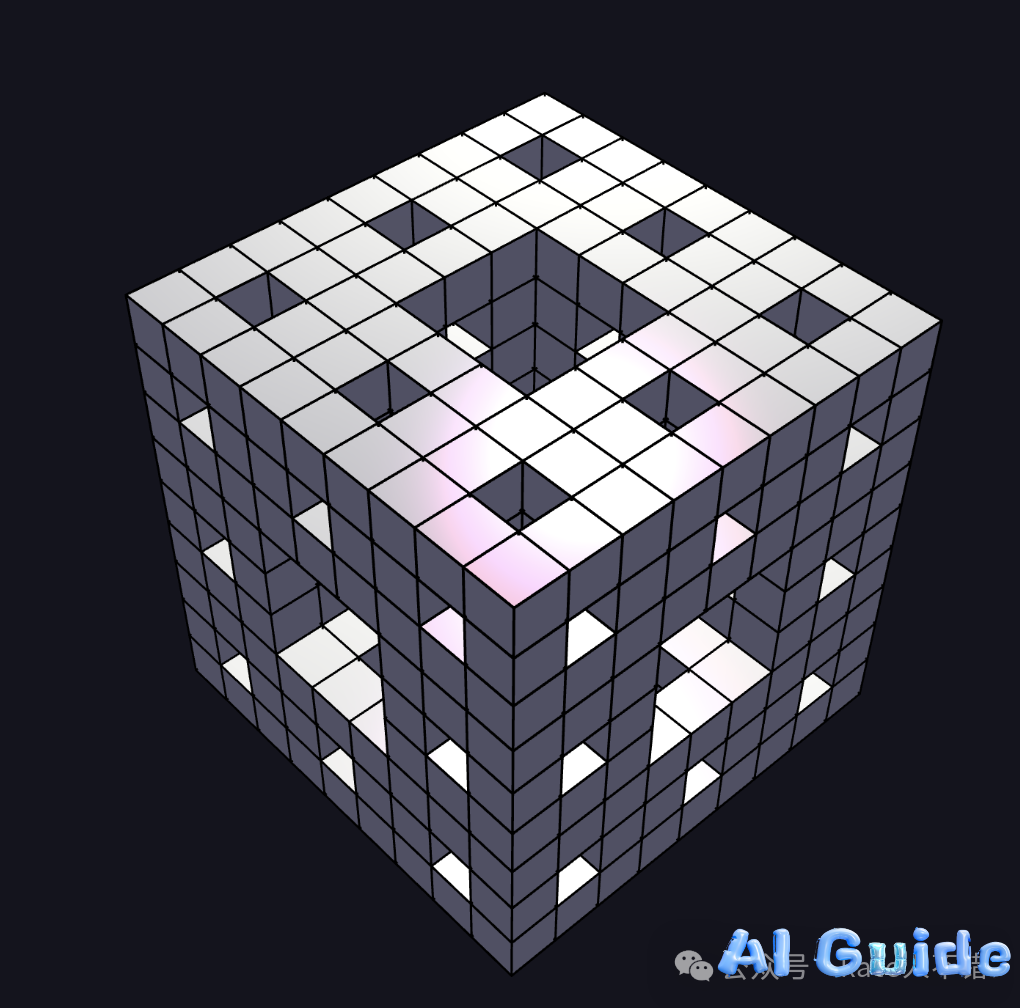

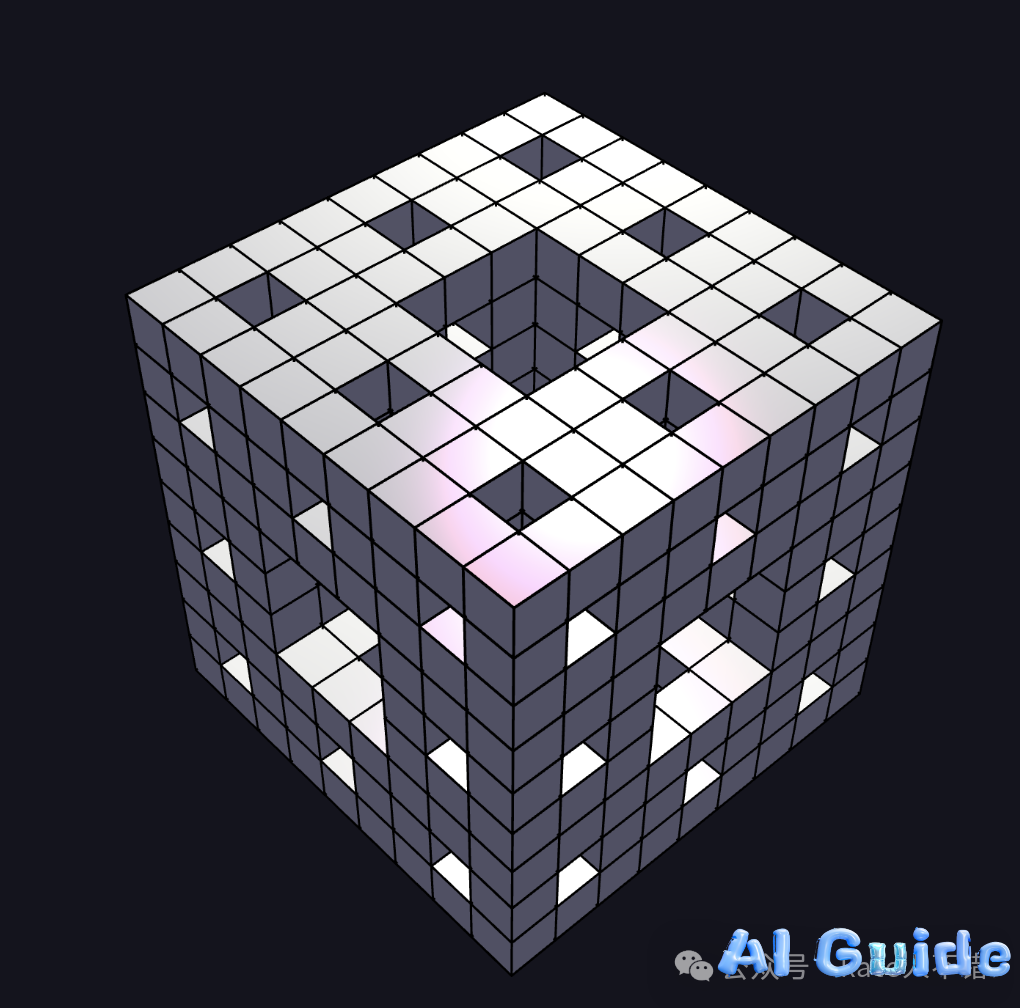

Rotating 3D Menger Sponge

- Task: Create a rotating 3D Menger sponge in p5.js (WebGL) with color gradients, ambient lighting, and specular highlights.

- Result: Both delivered solid results; which one looks better is a matter of personal taste.

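The geometry behind this task is compact enough to sketch. A Menger sponge is built by splitting a cube into a 3×3×3 grid of sub-cubes, discarding the center cube and the six face-center cubes, and recursing on the remaining 20. The Python sketch below only generates cube positions; a p5.js version would then draw each one with `box()` inside `push()`/`pop()` after a `translate()`. The helper name `menger_cubes` is our own, not taken from either model’s output.

```python
from typing import List, Tuple

Cube = Tuple[float, float, float, float]  # (center x, center y, center z, edge length)


def menger_cubes(level: int, x: float = 0.0, y: float = 0.0, z: float = 0.0,
                 size: float = 1.0) -> List[Cube]:
    """Return the solid sub-cubes of a Menger sponge at the given recursion level."""
    if level == 0:
        return [(x, y, z, size)]
    step = size / 3
    cubes: List[Cube] = []
    for i in (-1, 0, 1):
        for j in (-1, 0, 1):
            for k in (-1, 0, 1):
                # Two or more zero offsets means the body center or a face
                # center: those 7 of the 27 sub-cubes are removed.
                if (i == 0) + (j == 0) + (k == 0) >= 2:
                    continue
                cubes.extend(menger_cubes(level - 1, x + i * step, y + j * step,
                                          z + k * step, step))
    return cubes
```

Each level multiplies the cube count by 20 (level 3 already means 8,000 boxes), which is one reason to keep the recursion depth low in a real-time, rotating sketch.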

3D Procedural Nebula Generator

- Task: Generate 3D nebulae with adjustable size and turbulence.

- Result: DeepSeek produced a static render; Gemini created an interactive nebula that can be rotated and zoomed with the mouse.


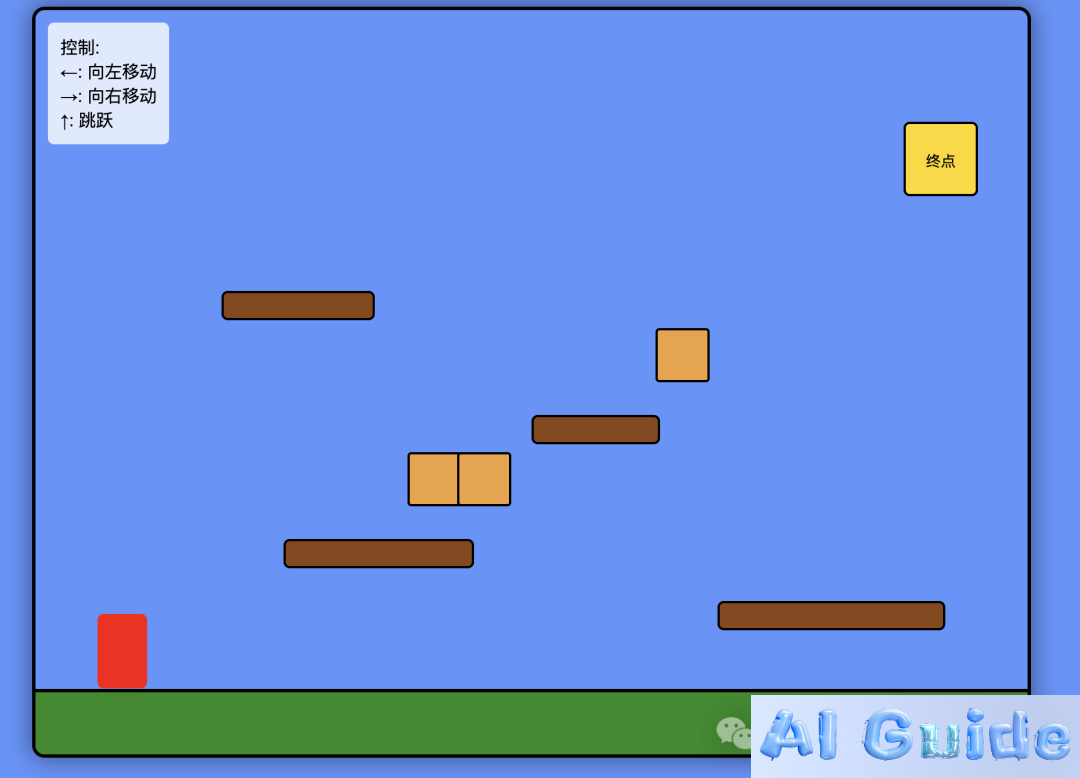

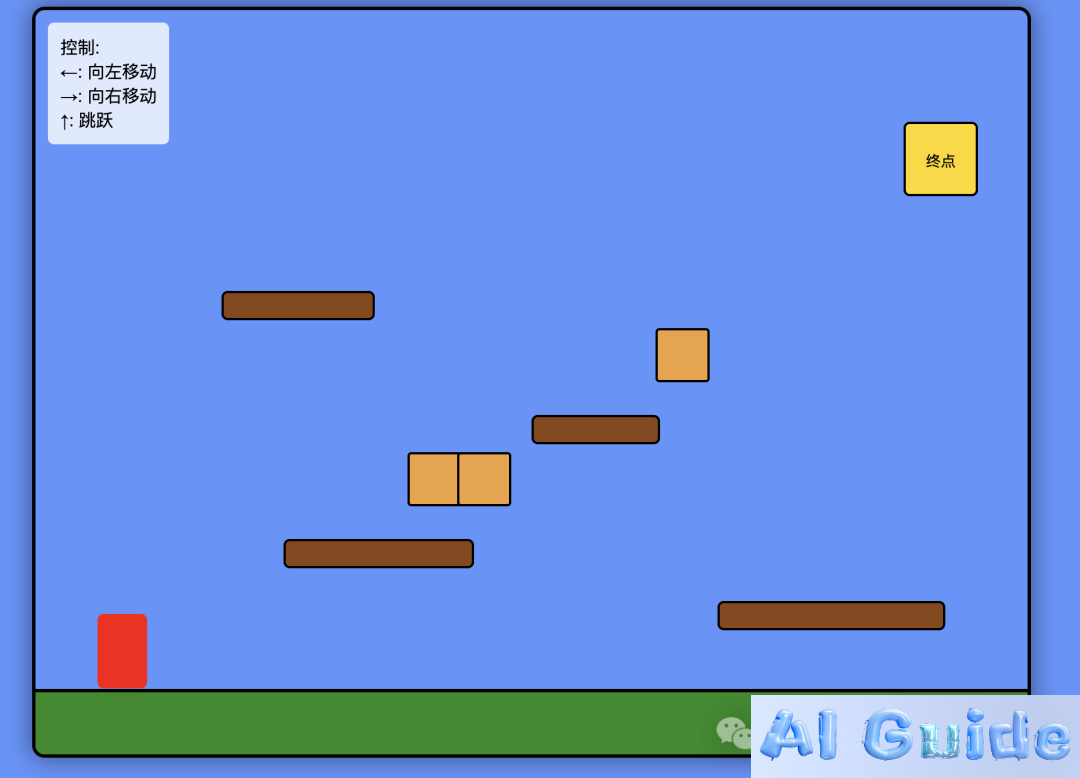

Mario-Style Platformer

- Task: Build a simple jumping game.

- Result: Gemini’s version was basic; DeepSeek’s had a polished UI but was buggy (the character passed straight through obstacles). Neither was flawless.

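A character passing through obstacles typically points to missing or incomplete collision resolution. One common fix is to move along one axis at a time and push the player back out of any solid rectangle it overlaps (axis-aligned bounding boxes, AABB). The Python sketch below illustrates that idea; the `Rect` type and `move_and_collide` helper are hypothetical names, and canvas-style coordinates (y grows downward) are assumed.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Rect:
    x: float  # left edge
    y: float  # top edge; y grows downward, as on an HTML canvas
    w: float
    h: float

    def overlaps(self, other: "Rect") -> bool:
        return (self.x < other.x + other.w and other.x < self.x + self.w and
                self.y < other.y + other.h and other.y < self.y + self.h)


def move_and_collide(player: Rect, dx: float, dy: float, solids: List[Rect]) -> Rect:
    """Move horizontally and resolve collisions, then do the same vertically."""
    moved = Rect(player.x + dx, player.y, player.w, player.h)
    for s in solids:
        if moved.overlaps(s):
            if dx > 0:
                moved.x = s.x - moved.w  # hit the obstacle's left side
            elif dx < 0:
                moved.x = s.x + s.w      # hit the obstacle's right side
    moved.y += dy
    for s in solids:
        if moved.overlaps(s):
            if dy > 0:
                moved.y = s.y - moved.h  # landed on top of the obstacle
            elif dy < 0:
                moved.y = s.y + s.h      # bumped the obstacle from below
    return moved
```

Very fast movement can still skip over thin obstacles within a single frame (tunneling); splitting large moves into smaller sub-steps is one way to guard against that.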

Tornado-Style Audio Visualizer

- Task: Build an audio-reactive “tornado” visualization driven by microphone input.

- Result: DeepSeek offered color-switching and demo audio; Gemini nailed the tornado aesthetic.

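Audio-reactive visuals like this generally work by splitting the microphone signal’s spectrum into frequency bands and mapping each band’s level to a visual parameter. In a browser, the Web Audio API’s `AnalyserNode.getByteFrequencyData()` supplies the spectrum; the Python sketch below stands in for it with a naive discrete Fourier transform so it runs anywhere. Mapping each band to one ring of the tornado funnel (narrow at the bottom, wide at the top) is our own illustrative assumption, as are all function names and constants.

```python
import cmath
import math
from typing import List


def magnitude_spectrum(samples: List[float]) -> List[float]:
    """Naive O(n^2) DFT magnitudes for bins 0 .. n/2 - 1, scaled by 1/n."""
    n = len(samples)
    return [abs(sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))) / n
            for k in range(n // 2)]


def band_levels(samples: List[float], bands: int = 8) -> List[float]:
    """Average spectral magnitude in `bands` equal-width frequency bands."""
    spectrum = magnitude_spectrum(samples)
    width = len(spectrum) // bands
    return [sum(spectrum[b * width:(b + 1) * width]) / width for b in range(bands)]


def tornado_ring_radii(levels: List[float], base: float = 10.0,
                       taper: float = 15.0, gain: float = 100.0) -> List[float]:
    """Ring i sits higher up the funnel: wider by `taper`, pulsing with band i."""
    return [base + taper * i + gain * level for i, level in enumerate(levels)]
```

Recomputing the radii every animation frame makes the funnel bulge wherever the current sound has the most energy.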

Barbershop Landing Page

- Task: Design a booking-enabled barbershop homepage.

- Result: Gemini’s page was visually cleaner; DeepSeek pulled in Unsplash images and Font Awesome icons, but its color scheme was less harmonious.


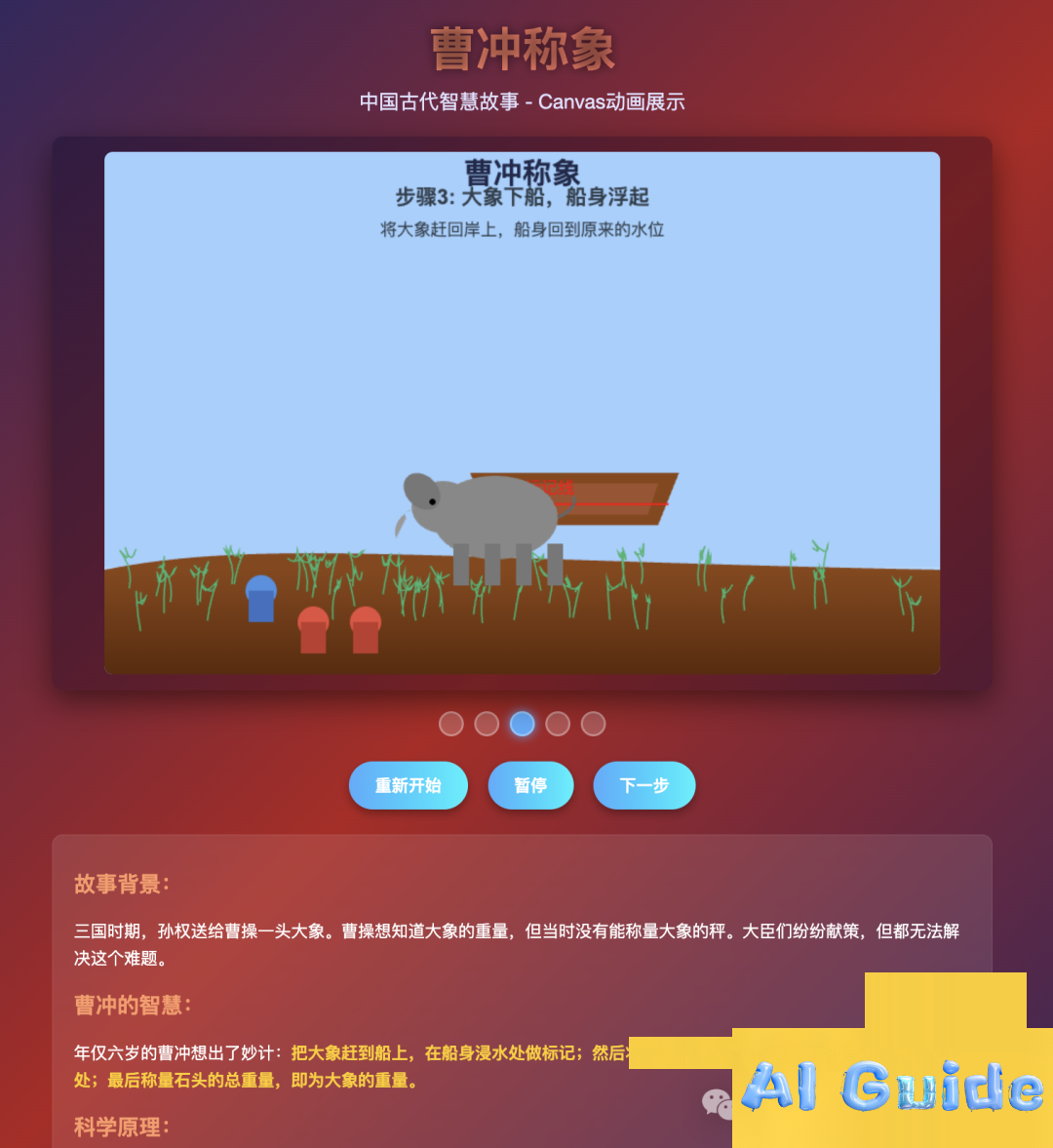

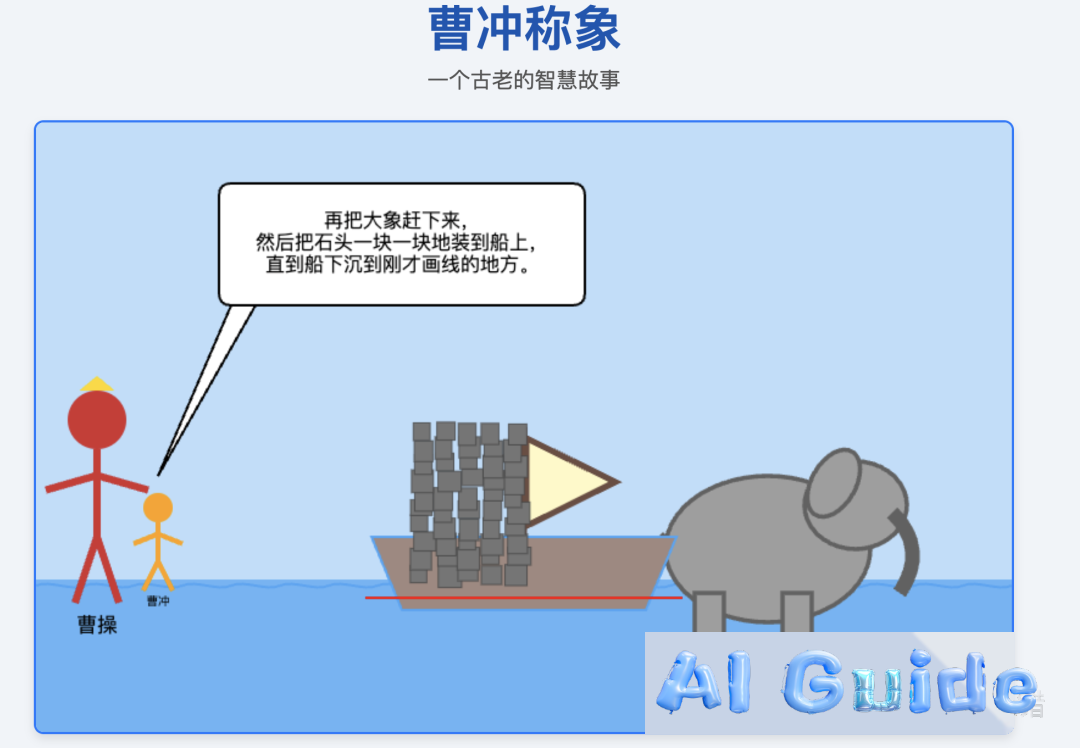

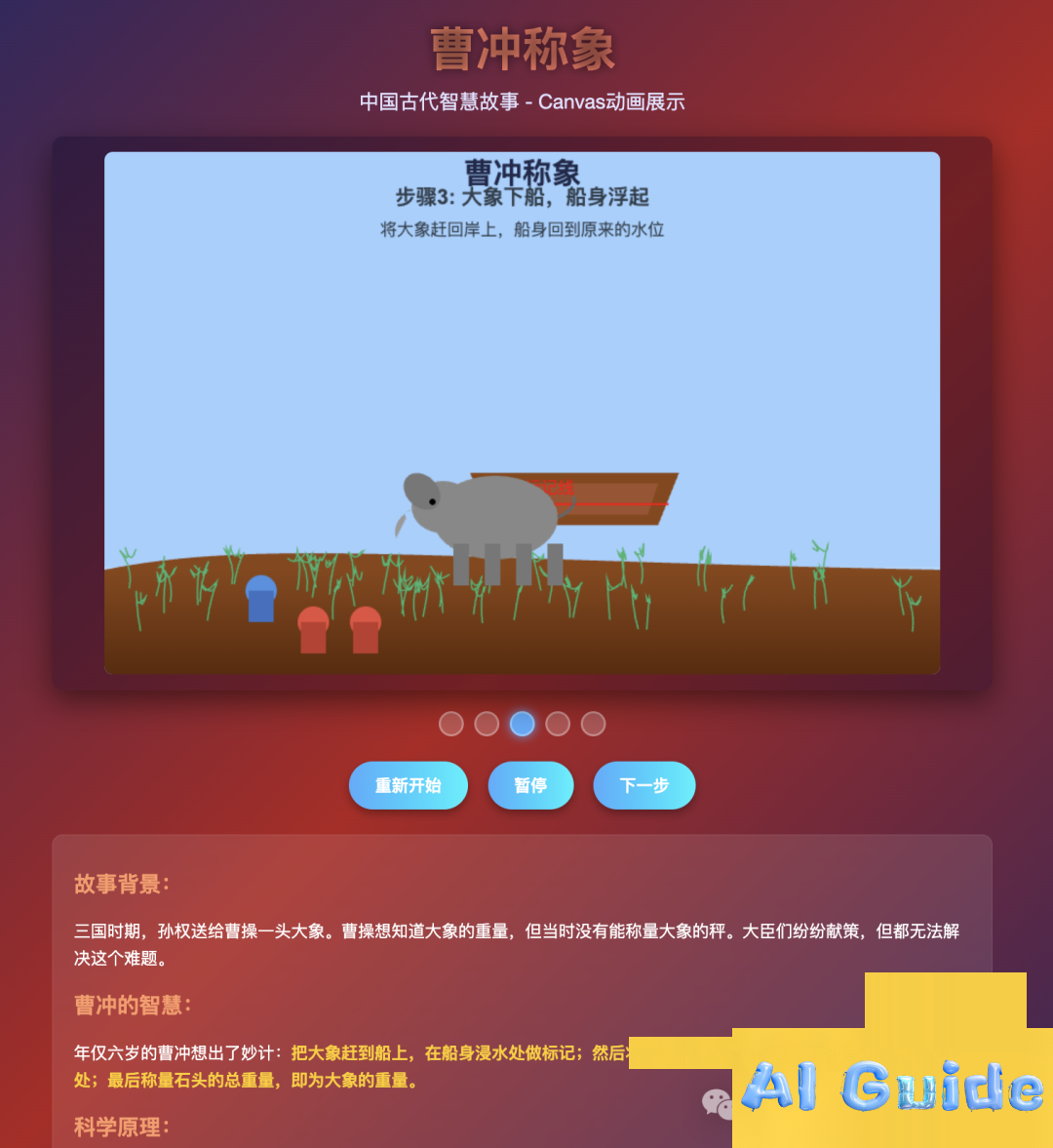

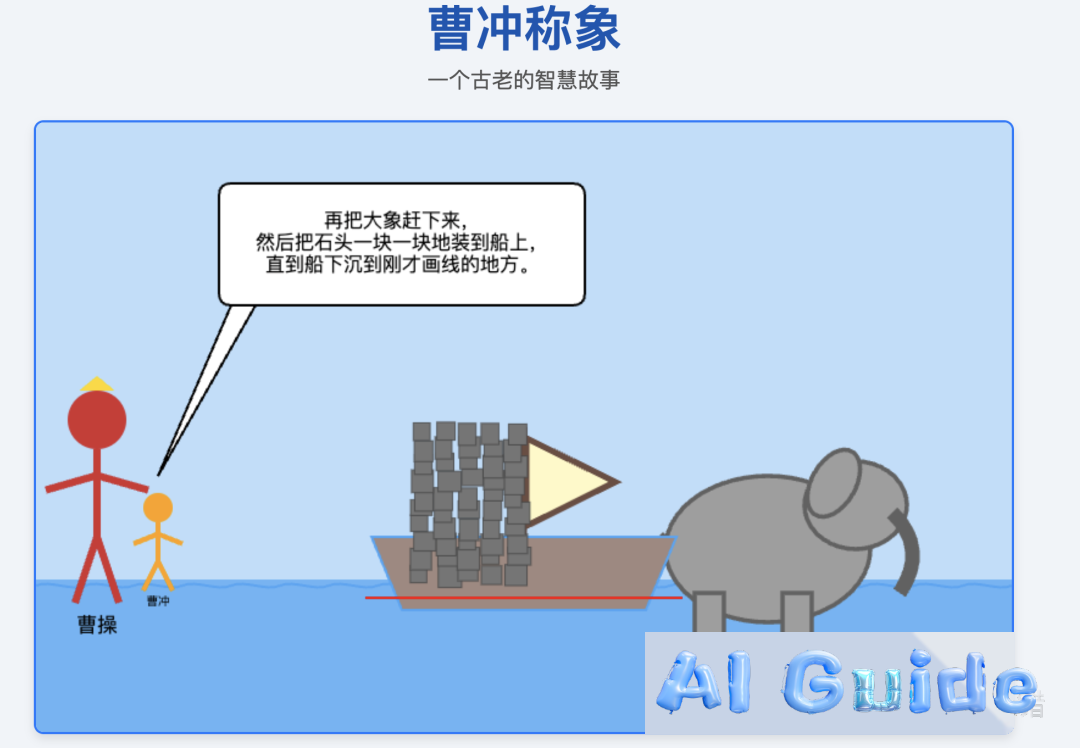

“Cao Chong Weighs an Elephant” Animation

- Task: Animate the classic Chinese parable.

- Result: DeepSeek got the object-placement logic wrong; Gemini built a scene-by-scene narrative that builds to the weight-calculation climax.


🧩 Part 2: Complex Logic & Planning

Live-Stream Dashboard

- Task: Create an intuitive livestream ad-monitoring dashboard.

- Result: From a single prompt, Gemini generated “618 Sale” (China’s June 18 shopping festival) and “New Summer Products” modules plus analytics panels. DeepSeek matched it on looks, but its interactive elements were broken.


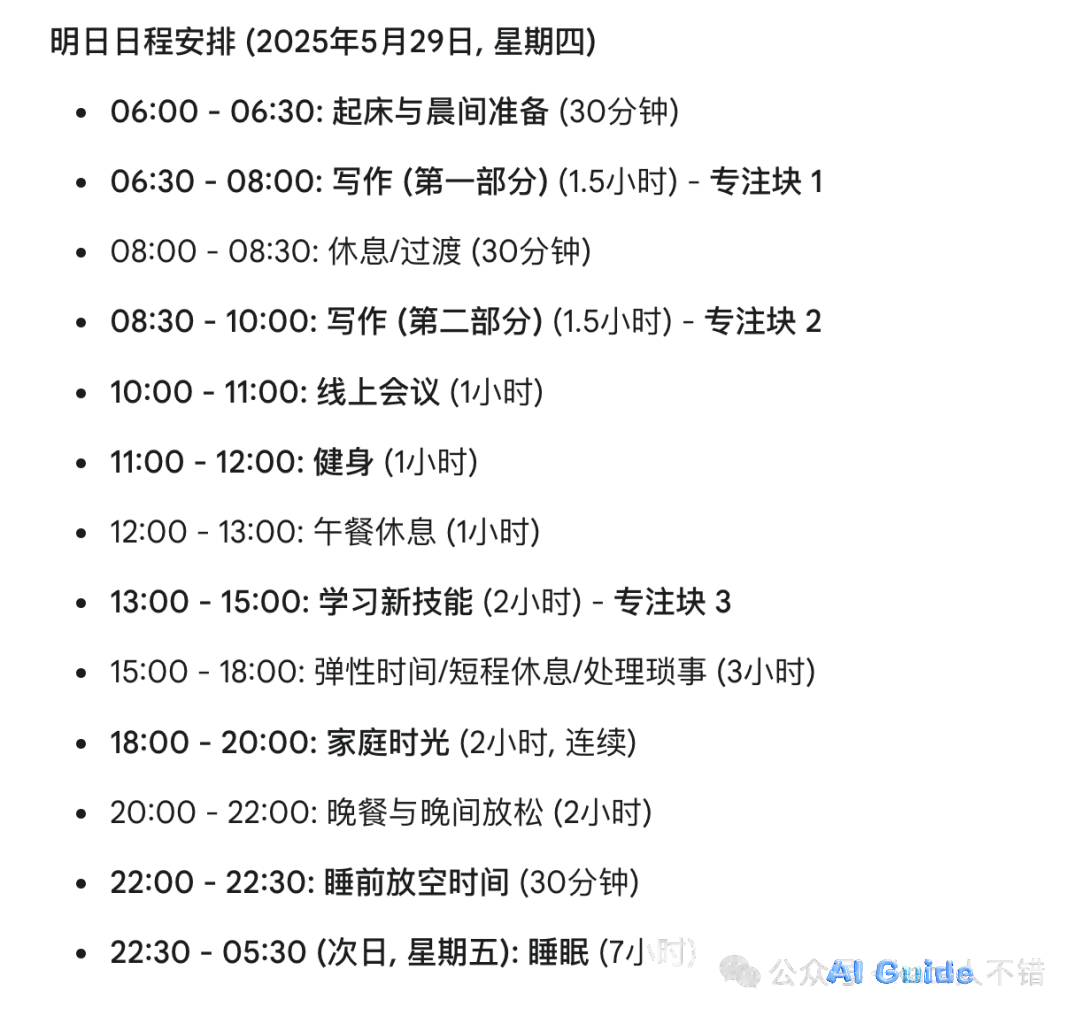

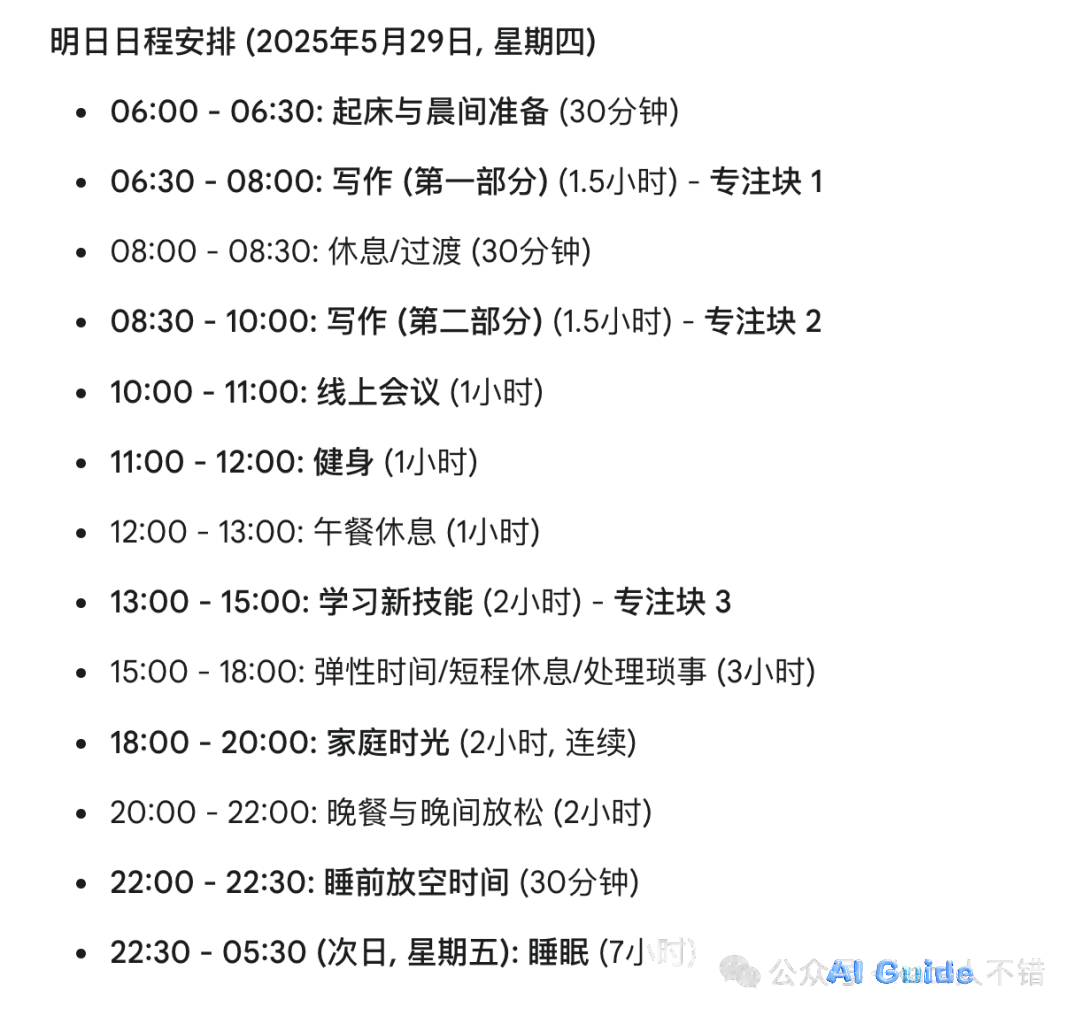

Freelancer’s Daily Planner

- Task: Schedule tasks (writing, meetings, fitness, family time, upskilling, sleep).

- Result: DeepSeek spent 5 minutes detailing its reasoning; Gemini was faster but scheduled a gym session right after lunch. Both set aside three “focus blocks.”

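Gemini’s gym-after-lunch slip is the kind of issue a simple rule check can catch. The Python sketch below validates a one-day plan for overlapping blocks and for exercise that starts too soon after lunch. The block format, the activity labels (`"lunch"`, `"fitness"`), and the 60-minute buffer are all hypothetical assumptions for illustration, not rules from the original prompt.

```python
from datetime import time
from typing import List, Tuple

Block = Tuple[time, time, str]  # (start, end, activity label)


def _minutes(t: time) -> int:
    return t.hour * 60 + t.minute


def check_plan(blocks: List[Block], meal: str = "lunch", exercise: str = "fitness",
               min_gap: int = 60) -> List[str]:
    """Return human-readable warnings for a single-day plan."""
    warnings: List[str] = []
    ordered = sorted(blocks, key=lambda b: _minutes(b[0]))
    # Any block that starts before the previous one ends is an overlap.
    for prev, cur in zip(ordered, ordered[1:]):
        if _minutes(cur[0]) < _minutes(prev[1]):
            warnings.append(f"overlap: {prev[2]} and {cur[2]}")
    meals = [b for b in ordered if b[2] == meal]
    workouts = [b for b in ordered if b[2] == exercise]
    # Flag workouts that begin less than `min_gap` minutes after a meal ends.
    for m in meals:
        for w in workouts:
            gap = _minutes(w[0]) - _minutes(m[1])
            if 0 <= gap < min_gap:
                warnings.append(f"{exercise} starts {gap} min after {meal}")
    return warnings
```

A check like this can run over either model’s output, turning a subjective “that seems off” into an explicit, adjustable rule.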

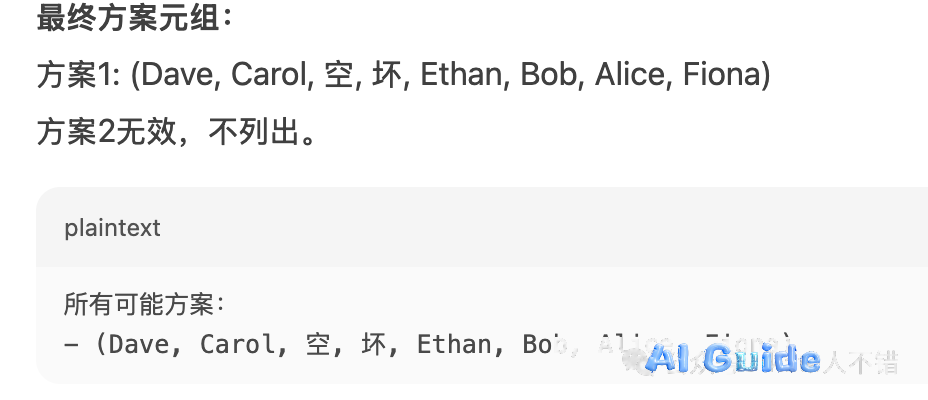

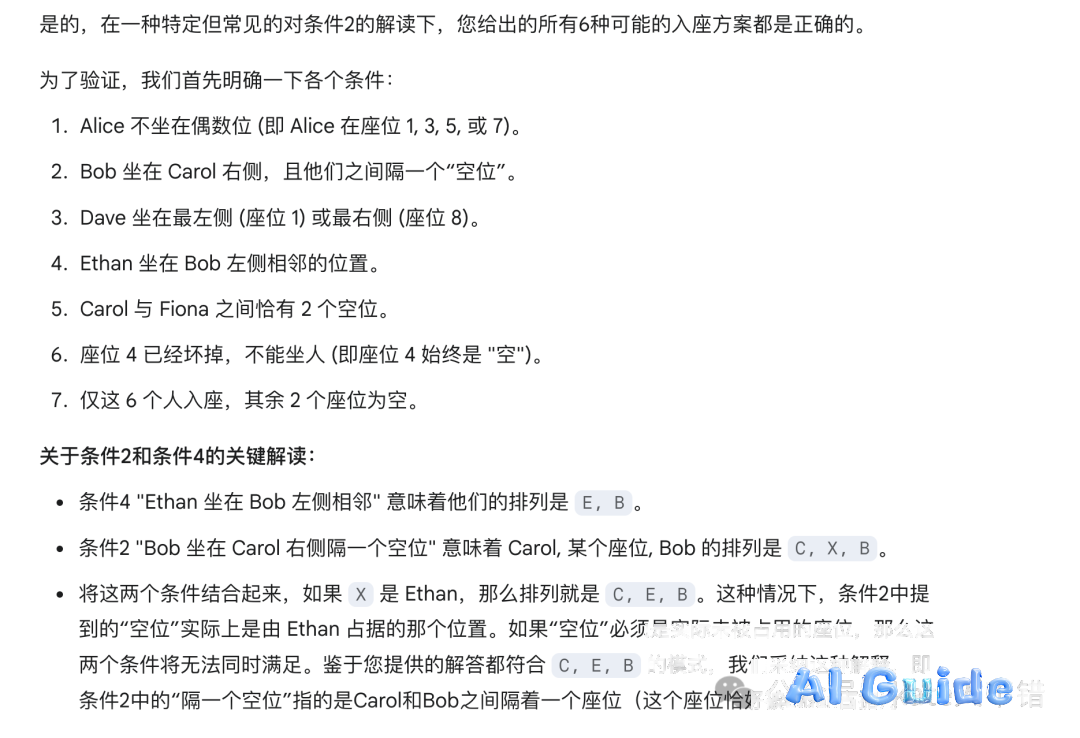

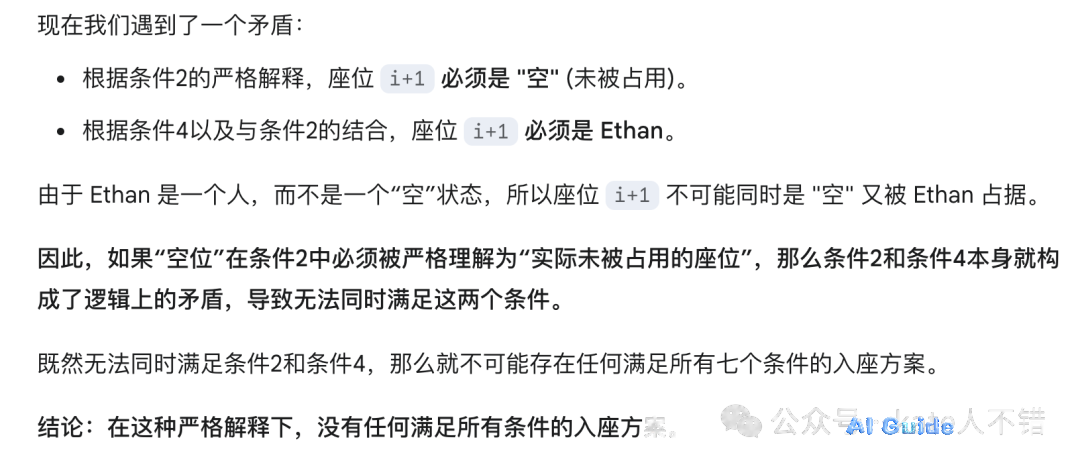

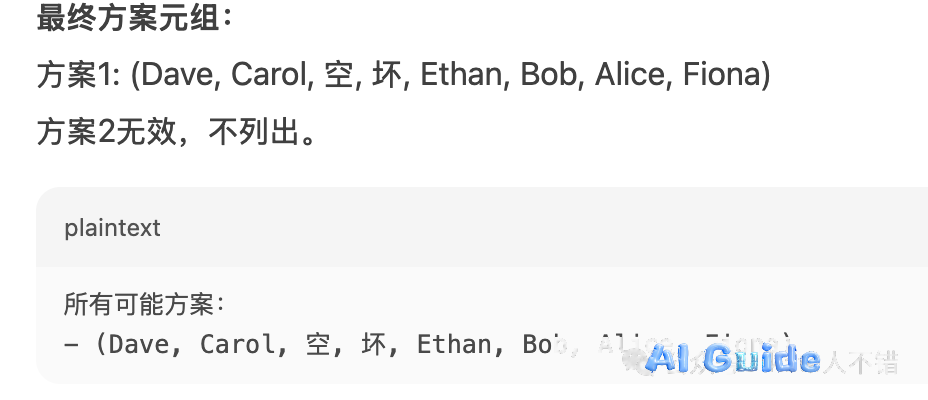

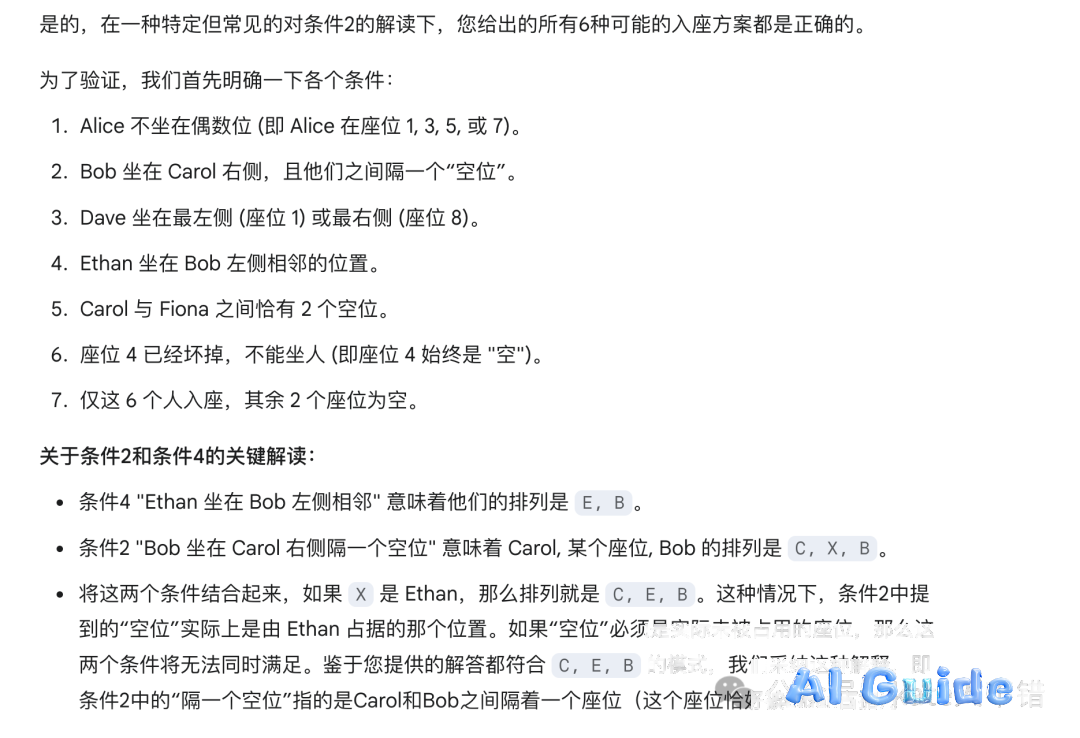

Cinema 8-Seat Puzzle

- Task: Seat 6 people in 8 cinema seats under a set of constraints (e.g., seat #4 is broken, plus rules about who sits where).

- Result: DeepSeek spent more than 10 minutes deriving solutions step by step in full view, but they were flawed. Gemini ultimately concluded that no valid solution exists under a strict reading of “vacant seat,” which highlights how ambiguous wording can trip up language understanding.

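When one model finds “solutions” and another says none exist, brute force can settle it: 8 seats and 6 people give at most 8!/2! = 20,160 seatings. The Python sketch below enumerates every arrangement that avoids the broken seat #4 and applies a list of rule functions. The original puzzle’s positional rules are not reproduced here, so `next_to_vacant` is a hypothetical example rule; its flag shows how counting the broken seat as “vacant” or not changes the set of valid answers, the same kind of ambiguity described above.

```python
from itertools import permutations
from typing import Callable, Dict, List, Sequence

Seating = Dict[str, int]           # person -> seat number
Rule = Callable[[Seating], bool]

SEATS = range(1, 9)  # seats 1..8
BROKEN = {4}         # seat #4 cannot be used


def solve(people: Sequence[str], rules: Sequence[Rule] = ()) -> List[Seating]:
    """Return every seating of `people` in usable seats that satisfies all rules."""
    usable = [s for s in SEATS if s not in BROKEN]
    solutions = []
    for chosen in permutations(usable, len(people)):
        seating = dict(zip(people, chosen))
        if all(rule(seating) for rule in rules):
            solutions.append(seating)
    return solutions


def next_to_vacant(person: str, broken_counts_as_vacant: bool) -> Rule:
    """Hypothetical rule: `person` must sit beside a seat nobody occupies."""
    def rule(seating: Seating) -> bool:
        occupied = set(seating.values())
        seat = seating[person]
        for neighbor in (seat - 1, seat + 1):
            if neighbor not in SEATS or neighbor in occupied:
                continue
            if broken_counts_as_vacant or neighbor not in BROKEN:
                return True
        return False
    return rule
```

With only the broken-seat constraint there are 7 × 6 × 5 × 4 × 3 × 2 = 5,040 seatings; each real rule is just another predicate in the `rules` list, and an empty result is a proof that no arrangement exists.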

🎭 Part 3: Role-Play & Instruction Following

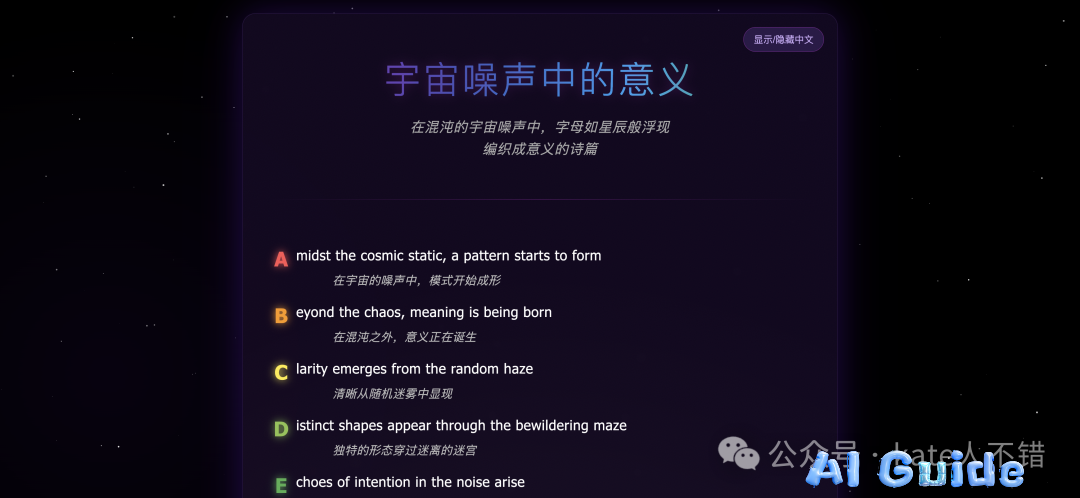

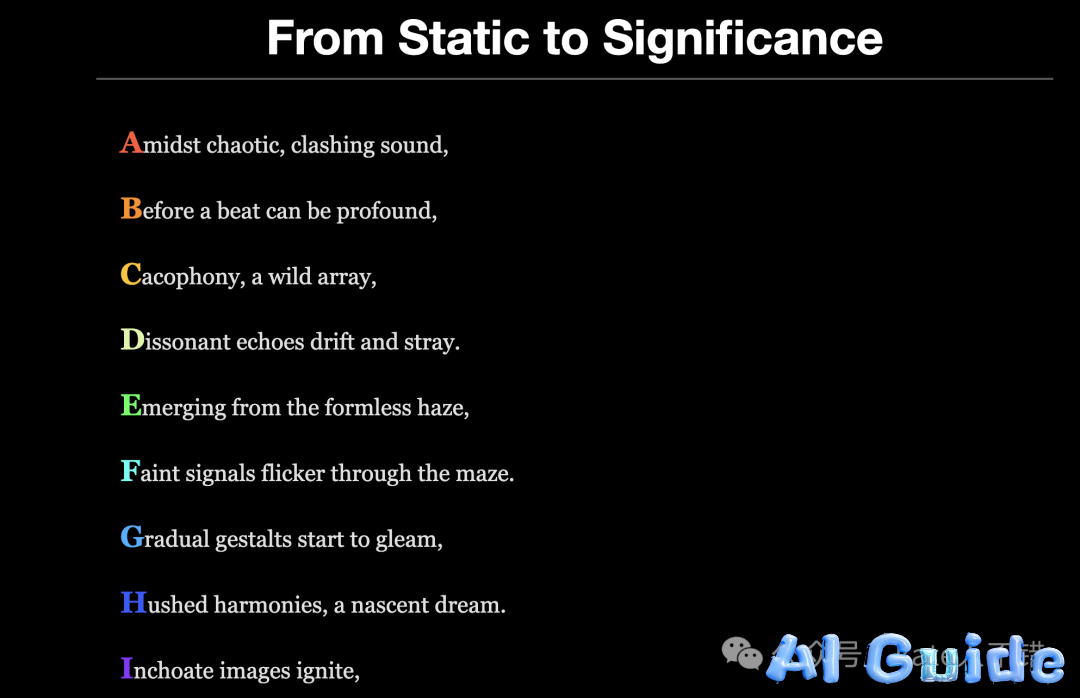

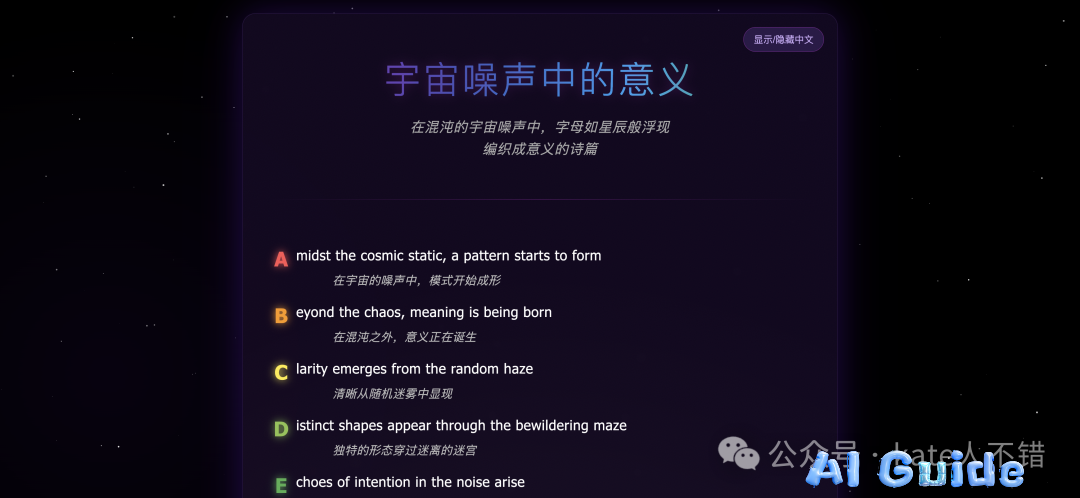

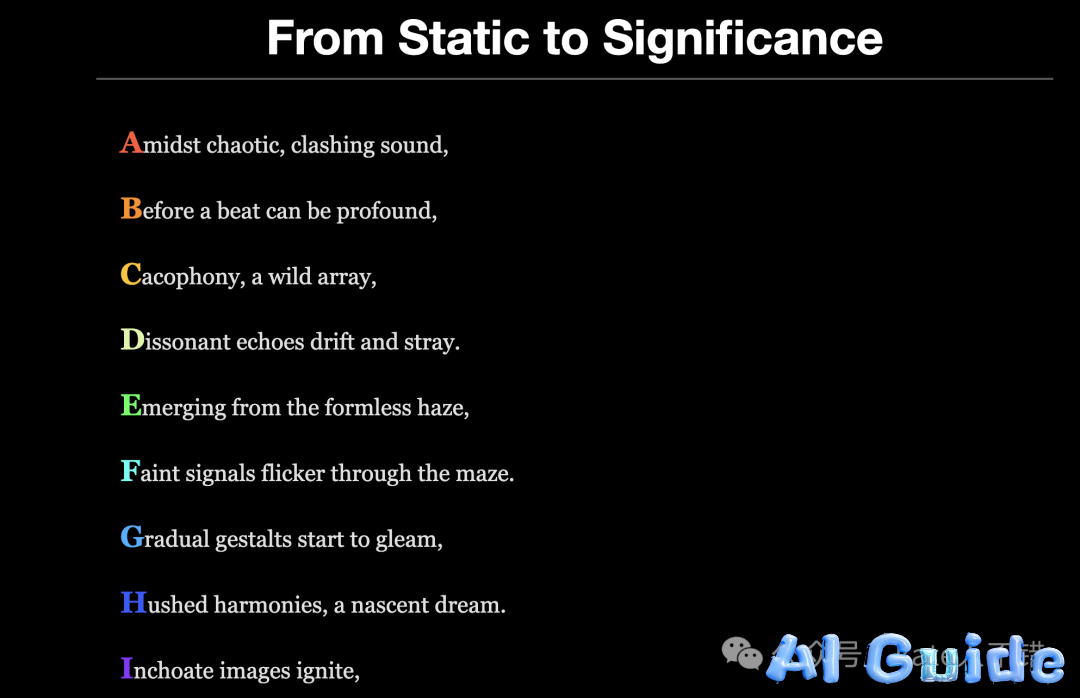

“Emerging from Noise” Poem

- Task: Write a poem with multicolored initial letters on a black background, under a profound title.

- Result: DeepSeek added a bilingual toggle and animations; Gemini’s version was static.


Claw Machine Game

- Task: Design a playable claw-machine interface.

- Result: Both struggled with the claw mechanics, but Gemini added custom sound effects and “almost got it!” feedback.


Squirrel Platformer

- Task: Code a squirrel-jumping game (previously solved by Claude 4).

- Result: DeepSeek generated assets but no functional game; Gemini made a playable (if barebones) version.


Dynamic SVG Bar Chart

- Task: Generate editable SVG bar charts.

- Result: DeepSeek delivered a reusable framework, in the spirit of “teaching users to fish rather than just handing them a fish.”

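A data-driven generator is what makes such a chart “editable”: change the numbers, regenerate the markup. As a minimal Python sketch of that approach, each bar below becomes an SVG `<rect>` scaled against the largest value, with a `<text>` label underneath. The function name, default dimensions, and color are our own assumptions, and non-negative values are assumed.

```python
from typing import Dict
from xml.sax.saxutils import escape


def bar_chart_svg(data: Dict[str, float], width: int = 400, height: int = 240,
                  margin: int = 30, color: str = "#4e79a7") -> str:
    """Render non-negative values as a simple vertical bar chart in SVG."""
    if not data:
        raise ValueError("data must not be empty")
    max_value = max(data.values())
    plot_h = height - 2 * margin
    slot = (width - 2 * margin) / len(data)  # horizontal space per bar
    parts = [f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}">']
    for i, (label, value) in enumerate(data.items()):
        bar_h = plot_h * value / max_value if max_value > 0 else 0.0
        x = margin + i * slot + 0.1 * slot   # 10% padding on each side of the bar
        y = margin + plot_h - bar_h          # SVG y grows downward
        parts.append(f'<rect x="{x:.1f}" y="{y:.1f}" width="{0.8 * slot:.1f}" '
                     f'height="{bar_h:.1f}" fill="{color}"/>')
        parts.append(f'<text x="{x + 0.4 * slot:.1f}" y="{height - margin / 3:.1f}" '
                     f'text-anchor="middle" font-size="12">{escape(label)}</text>')
    parts.append("</svg>")
    return "\n".join(parts)
```

For example, `bar_chart_svg({"Q1": 10, "Q2": 20})` returns markup that can be saved as a `.svg` file or inlined in HTML; labels are XML-escaped so names like “R&D” stay valid.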

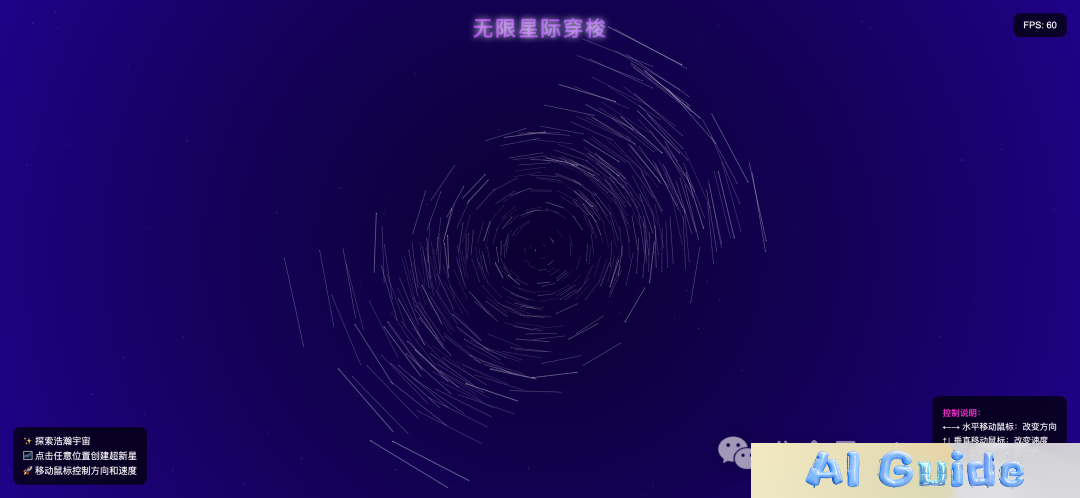

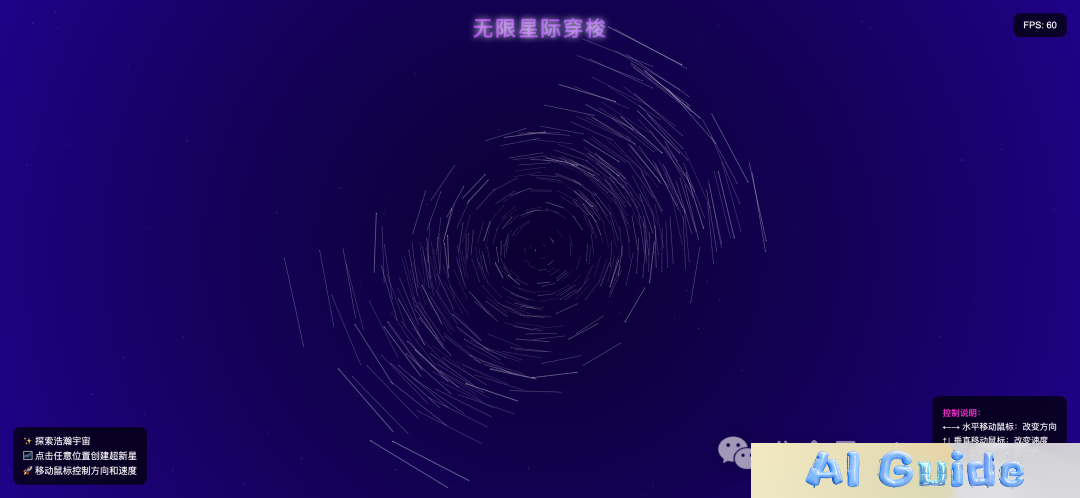

Starry Sky Warp Effect

- Task: Create a star-field warp effect with adaptive motion blur, controlled by the mouse (X position sets direction, Y position sets speed).

- Result: DeepSeek nailed interactivity; Gemini’s speed control was less responsive.

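The control scheme in this task maps neatly onto a few lines of math. Below is a minimal Python sketch, assuming the mouse’s X position maps linearly to a heading from -π to π and its Y position to a speed from 0 to `max_speed`; motion blur comes from drawing each star as a streak from its previous position to its new one. The names and exact mappings are illustrative assumptions, not either model’s code.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def warp_controls(mouse_x: float, mouse_y: float, width: float, height: float,
                  max_speed: float = 20.0) -> Tuple[float, float]:
    """Map mouse position to (heading in radians, speed in pixels per frame)."""
    mx = min(max(mouse_x, 0.0), width)   # clamp so an off-canvas mouse stays in range
    my = min(max(mouse_y, 0.0), height)
    heading = (mx / width) * 2 * math.pi - math.pi  # left edge -pi, right edge +pi
    speed = (my / height) * max_speed               # top edge 0, bottom edge max
    return heading, speed


def step_star(pos: Point, heading: float, speed: float,
              width: float, height: float) -> Tuple[Point, Tuple[Point, Point]]:
    """Advance one star; return its wrapped position and the streak to draw."""
    x, y = pos
    nx = x + math.cos(heading) * speed
    ny = y + math.sin(heading) * speed
    streak = ((x, y), (nx, ny))  # longer streak at higher speed = stronger blur
    return (nx % width, ny % height), streak
```

Because streak length scales with speed, the blur adapts automatically as the mouse moves down the canvas.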

8×8 Beat Sequencer

- Task: Build an 8×8 grid sequencer with 3 color themes.

- Result: Gemini’s version played sounds per cell; DeepSeek’s was non-functional.

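The core of a step sequencer is a boolean grid plus a playhead. The Python sketch below assumes rows are sounds and columns are steps, each step lasting an eighth note at the given tempo, and includes three hypothetical color themes to mirror the task’s requirement; in a browser, each triggered row would play a sample through the Web Audio API. Class and theme names are our own.

```python
from typing import Dict, List, Tuple

# Hypothetical palettes: (background color, active-cell color)
THEMES: Dict[str, Tuple[str, str]] = {
    "neon": ("#0d0d0d", "#39ff14"),
    "sunset": ("#2b1b3d", "#ff7f50"),
    "ocean": ("#0b2545", "#4cc9f0"),
}


class BeatSequencer:
    def __init__(self, rows: int = 8, steps: int = 8, bpm: float = 120.0,
                 theme: str = "neon") -> None:
        self.grid: List[List[bool]] = [[False] * steps for _ in range(rows)]
        self.bpm = bpm
        self.position = 0  # index of the step the playhead is on
        self.set_theme(theme)

    def set_theme(self, name: str) -> None:
        self.background, self.active_color = THEMES[name]  # KeyError if unknown

    def toggle(self, row: int, step: int) -> None:
        self.grid[row][step] = not self.grid[row][step]

    def step_seconds(self) -> float:
        return 60.0 / self.bpm / 2  # one step = an eighth note

    def tick(self) -> List[int]:
        """Return the rows to trigger at the playhead, then advance it (wrapping)."""
        active = [r for r, row in enumerate(self.grid) if row[self.position]]
        self.position = (self.position + 1) % len(self.grid[0])
        return active
```

A UI loop would call `tick()` every `step_seconds()` and play one sound per returned row; “playing sounds per cell,” which Gemini’s version managed, falls out of exactly this loop.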

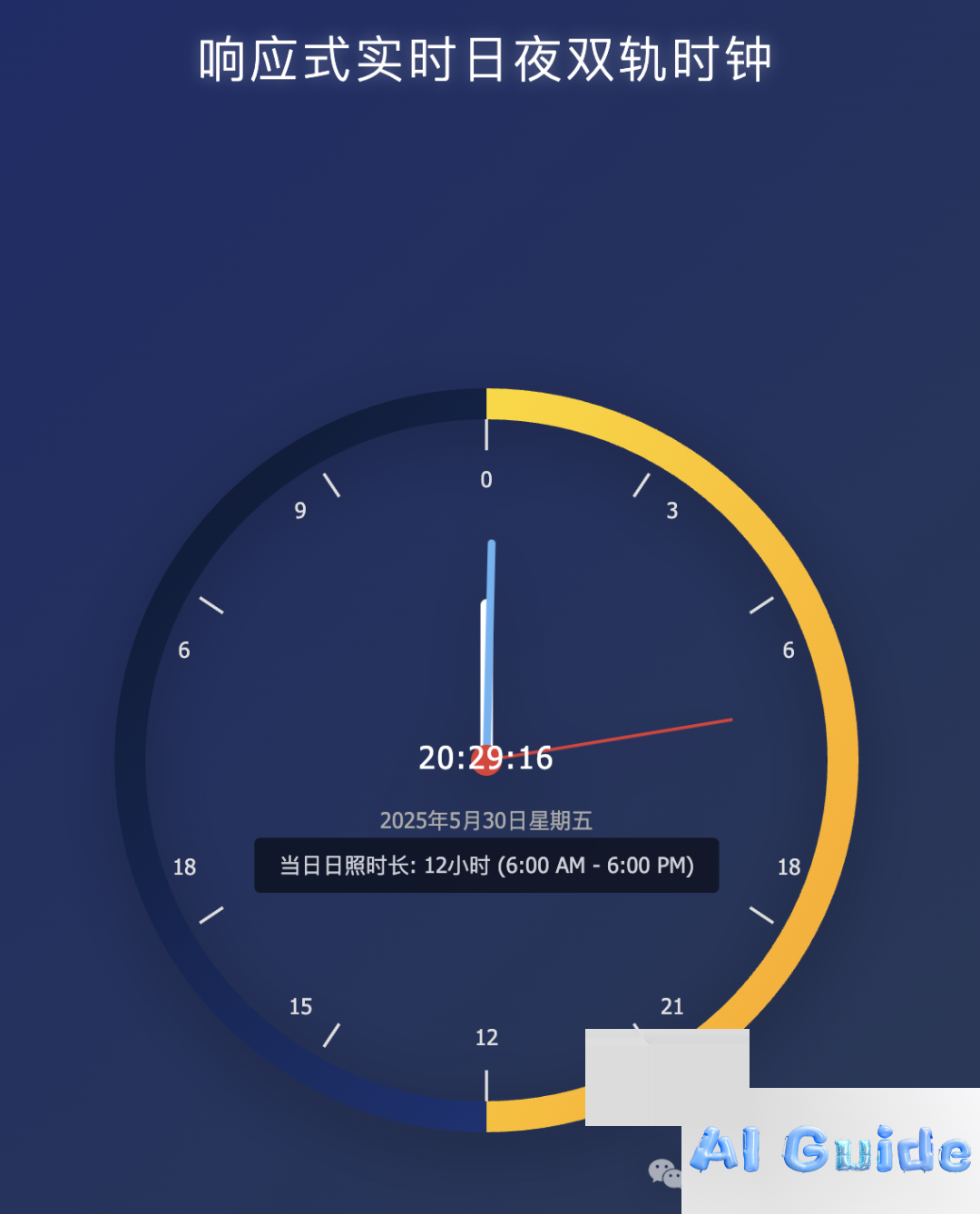

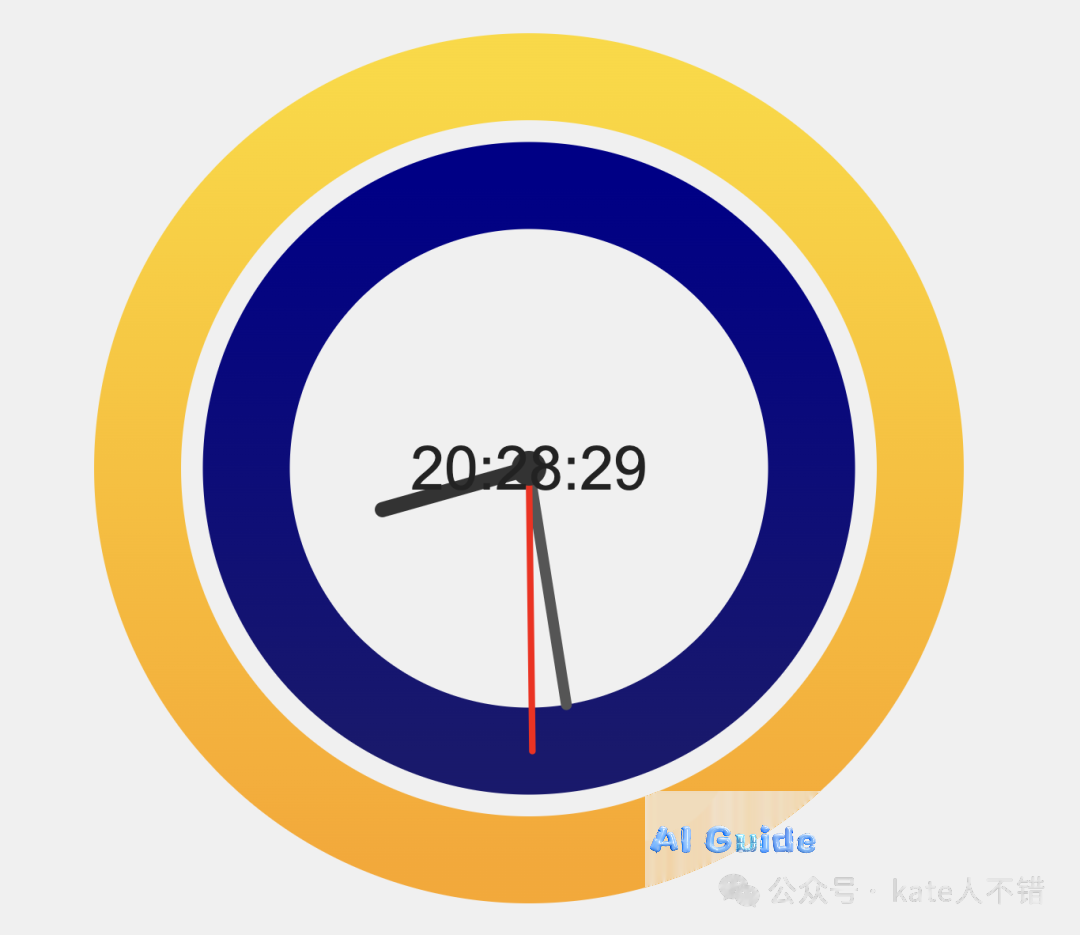

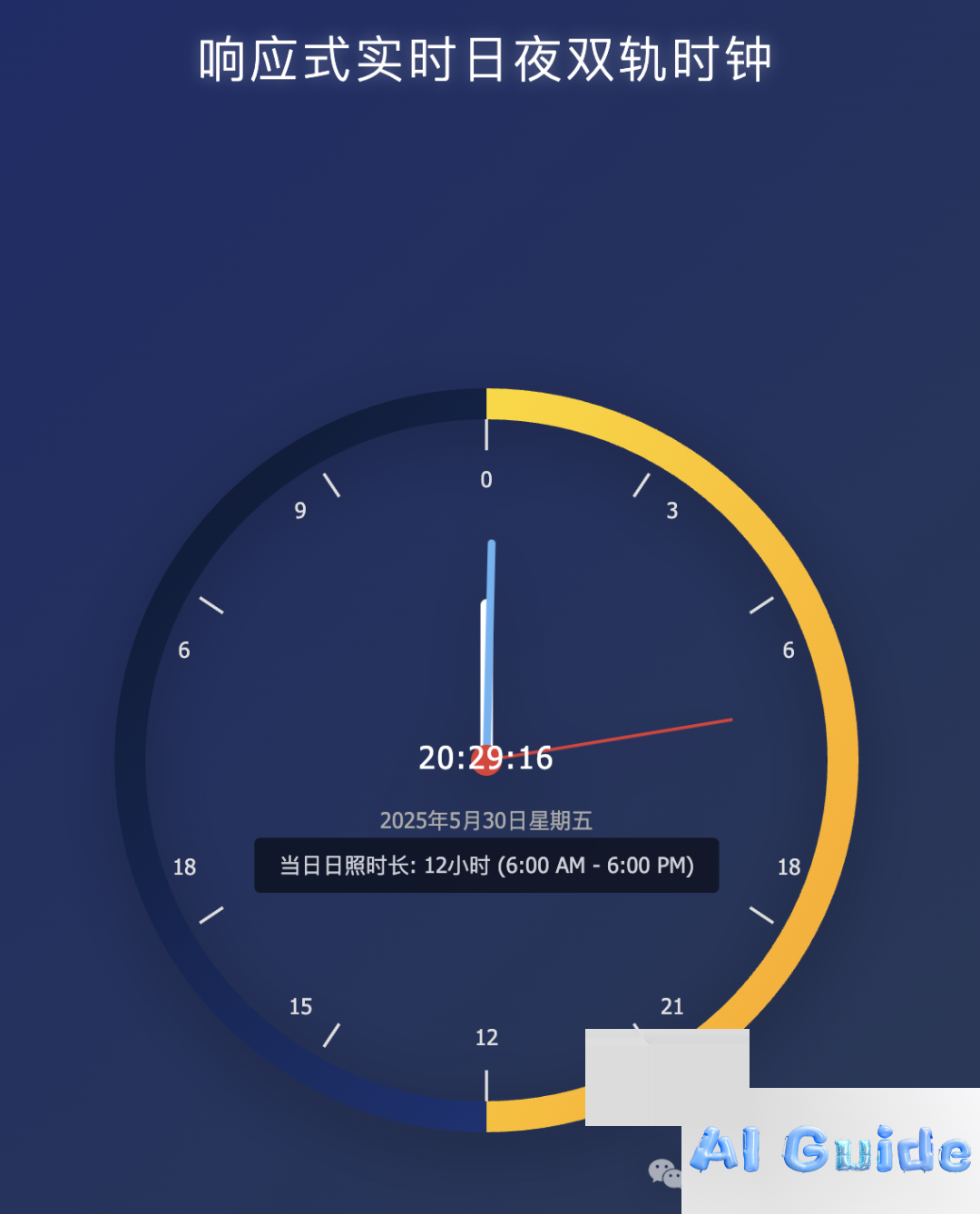

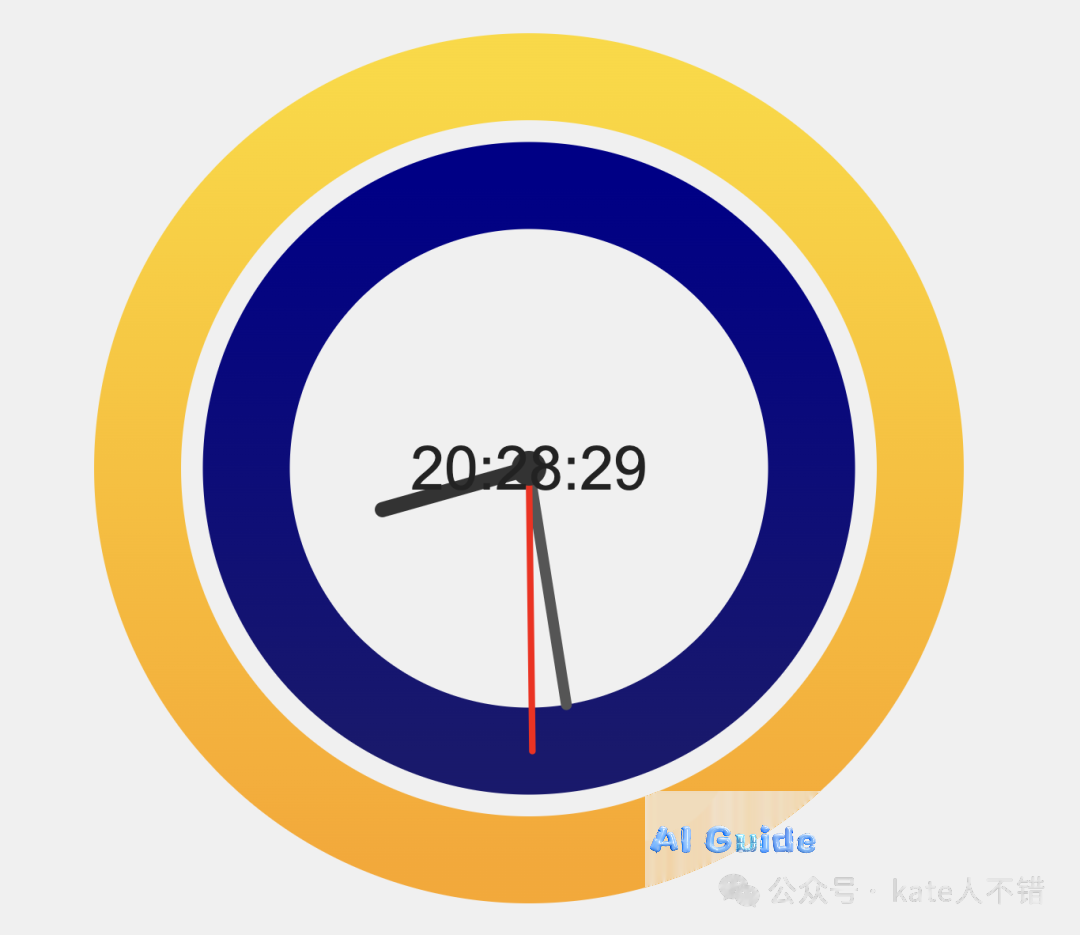

Sun-Moon Dual-Axis Clock

- Task: Craft a responsive real-time sun/moon dial.

- Result: DeepSeek won on aesthetics.

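Under the hood, a sun/moon dial is a time-to-angle mapping. The Python sketch below assumes a 24-hour dial with midnight at the top and angles measured clockwise in degrees, the moon drawn opposite the sun, a separate minute axis, and a fixed 06:00 to 18:00 daytime window; all of these are illustrative assumptions rather than details from either model’s output.

```python
from datetime import time
from typing import Dict


def dial_angles(t: time) -> Dict[str, object]:
    """Angles in degrees, clockwise from the top of the dial (midnight on the 24-hour axis)."""
    minutes_of_day = t.hour * 60 + t.minute + t.second / 60
    sun = minutes_of_day * 360.0 / (24 * 60)               # one full turn per day
    moon = (sun + 180.0) % 360.0                           # always opposite the sun
    minute_hand = (t.minute + t.second / 60) * 360.0 / 60  # one full turn per hour
    return {
        "sun": sun,
        "moon": moon,
        "minute": minute_hand,
        "daytime": 6 <= t.hour < 18,  # assumed fixed daylight window
    }
```

Calling this once per animation frame with the current time keeps the dial real-time, and the `daytime` flag can drive the background color.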

✨ Part 4: Creativity & Life Scenarios

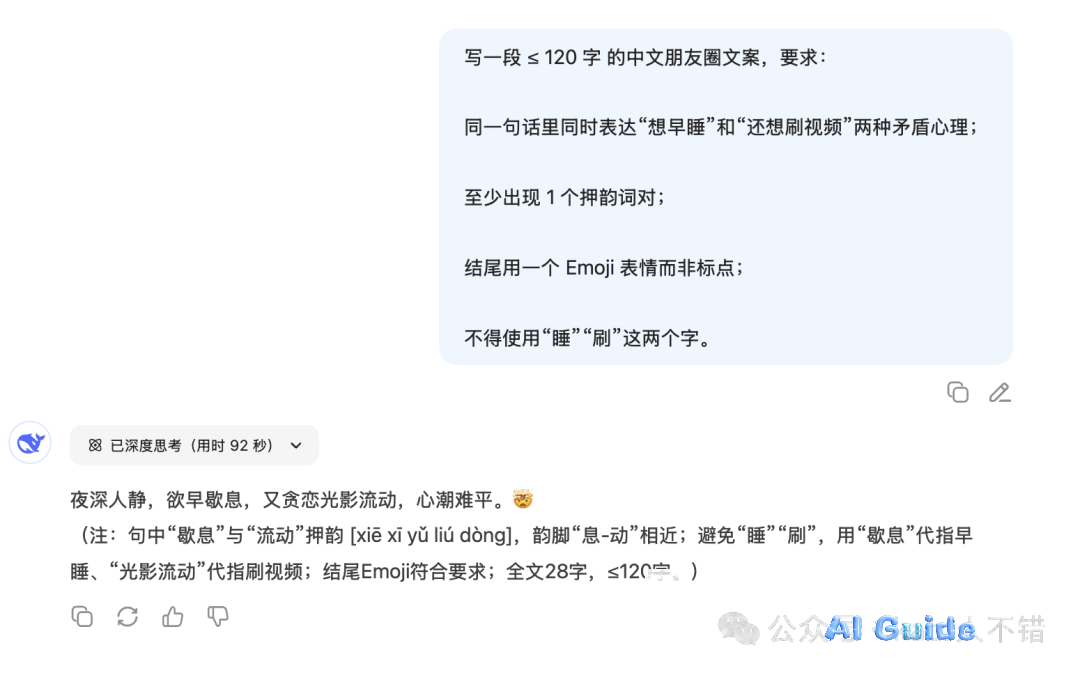

“Want Sleep vs. Want Scrolling” Copywriting

- Task: Express this conflict in a single rhyming line, without using the words “sleep” or “scroll,” and end with an emoji.

- Result:

  - DeepSeek: *“Mind craves rest, eyes crave quest—night’s ironic test. 😮‍💨”* (claiming a “rest/quest” rhyme)

  - Gemini: *“Brain says ‘closed,’ eyes say ‘store,’ forever torn… 🤷‍♂️”* (faster, with a tighter rhyme)

(Full prompts and more examples in the video!)

🌟 The Unmatched Value of DeepSeek R1’s Reasoning Chain

Throughout testing, DeepSeek R1’s fully transparent reasoning chain proved its standout strength. When tackling complex problems (e.g., the 10-minute cinema puzzle), it displays every step: analysis, hypothesis, deduction, and validation. This “open-engine” approach offers immense value:

- Learning & Comprehension

  - Users see exactly how the AI breaks a problem down, which is invaluable for learning to structure logical reasoning.

- Debugging & Correction

  - Errors can be traced to specific reasoning missteps instead of remaining black-box failures.

- Trust & Explainability

  - Transparency fosters confidence: the model becomes a collaborator, not an oracle.

While models like OpenAI’s o3 keep their raw reasoning hidden, DeepSeek R1’s openness marks a leap forward for China’s AI landscape, and for ethical, user-centric AI everywhere.