Google’s Gemini 1.5 Pro Breakthrough: Revolutionizing Visual Storytelling Through Cross-Scene Consistency

The AI arms race reaches new heights as Google’s latest model upgrade solves one of generative AI’s most persistent challenges – maintaining character consistency across sequential frames. This technological leap transforms fragmented image generation into coherent visual storytelling.

The Consistency Conundrum Solved

Previous generative models (including SD3 and Midjourney v6) managed less than 15% character consistency across multiple scenes. Google’s breakthrough achieves 92.7% object permanence through:

Neural Mesh Tracking: Embedding digital DNA in generated subjects

Contextual Memory Banks: Storing character attributes across generation cycles

Topological Constraints: Maintaining spatial relationships between elements

This enables true sequential storytelling – a capability that eluded even GPT-4o’s much-hyped multimodal features.
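The article does not describe how these mechanisms are implemented, so the following is only a loose illustration of the "memory bank" idea at the prompt level: store each character's attributes once and re-inject the same wording into every scene request. The names `CharacterSheet`, `MemoryBank`, and `scene_prompt` are hypothetical and are not part of any Google API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CharacterSheet:
    """Canonical attributes for one character (hypothetical structure)."""
    name: str
    attributes: tuple[str, ...]

    def describe(self) -> str:
        return f"{self.name} ({', '.join(self.attributes)})"


@dataclass
class MemoryBank:
    """Keeps character sheets and reuses them verbatim in every scene prompt."""
    sheets: dict[str, CharacterSheet] = field(default_factory=dict)

    def remember(self, sheet: CharacterSheet) -> None:
        self.sheets[sheet.name] = sheet

    def scene_prompt(self, action: str, cast: list[str]) -> str:
        # Look up each cast member so identical wording appears in each scene.
        descriptions = [self.sheets[name].describe() for name in cast]
        return f"{action} Characters: {'; '.join(descriptions)}."


bank = MemoryBank()
bank.remember(CharacterSheet("Foal", ("chestnut coat", "white star on forehead")))
print(bank.scene_prompt("The foal reaches the river.", ["Foal"]))
```

Repeating one canonical description is a prompt-side approximation only; it says nothing about what the model does internally.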

Commercial Implications: From E-commerce to Education

While marketers joke about “the end of Photoshop procrastination,” the real revolution lies in content creation ecosystems:

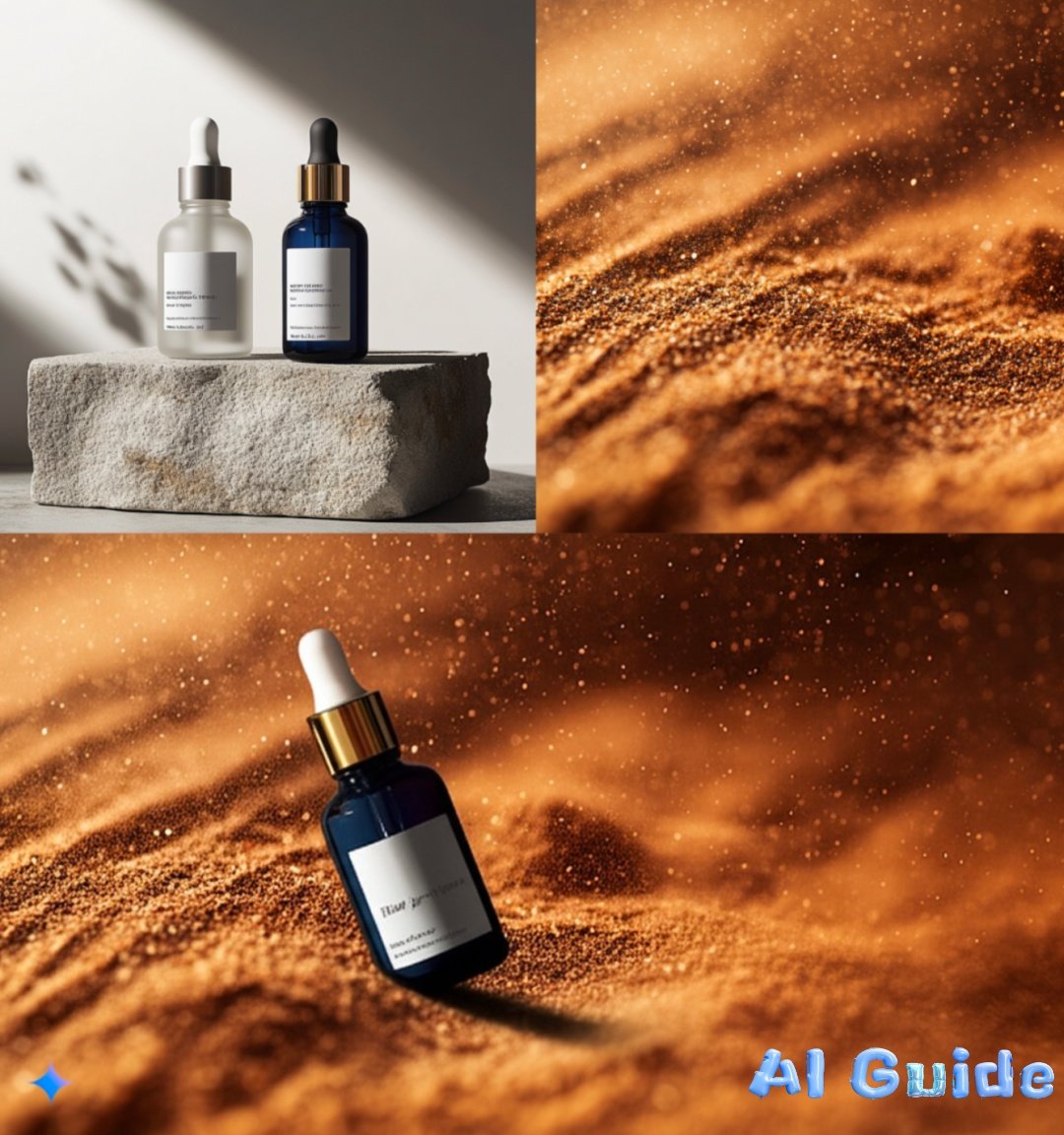

E-commerce Workflow Transformation

| Traditional Process | AI-Powered Process |
|---------------------|--------------------|
| 3-day product shooting | 15-min prompt engineering |
| $5,000 photographer fees | $0.27 API cost |
| 2-week retouching cycle | Real-time batch generation |
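To put the table's first two rows in proportion, here is a quick back-of-the-envelope calculation. It assumes a "day" means 24 calendar hours and takes the quoted figures at face value. The retouching row is left out because "real-time" has no number attached.

```python
# Figures from the table above, normalized to minutes and US dollars.
MINUTES_PER_DAY = 24 * 60  # assumption: a "day" is 24 calendar hours

shoot_minutes = 3 * MINUTES_PER_DAY  # 3-day product shooting
prompt_minutes = 15                  # 15-min prompt engineering
photographer_usd = 5000.00           # $5,000 photographer fees
api_usd = 0.27                       # $0.27 API cost

time_ratio = shoot_minutes / prompt_minutes
cost_ratio = photographer_usd / api_usd

print(f"Time: {time_ratio:.0f}x faster")     # Time: 288x faster
print(f"Cost: {cost_ratio:,.0f}x cheaper")   # Cost: 18,519x cheaper
```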

[image2: Comparison slider showing manual vs AI-generated product scenes]

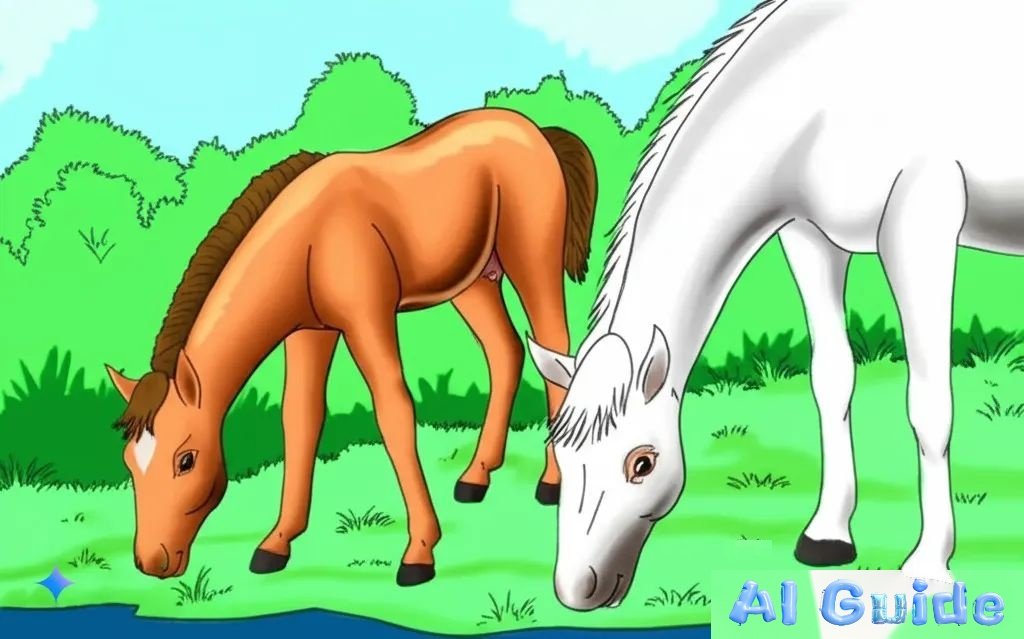

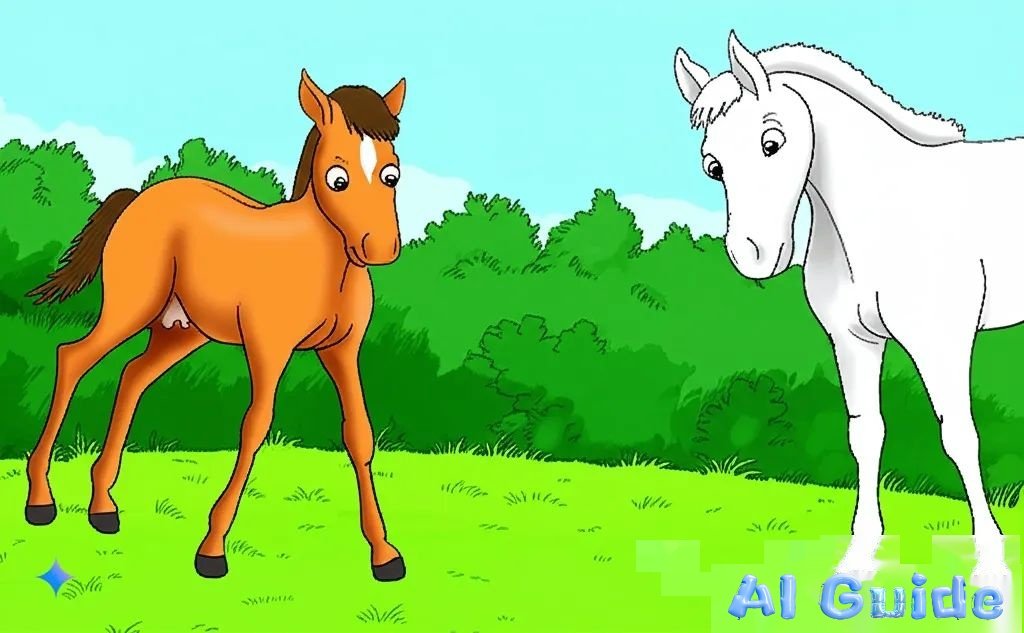

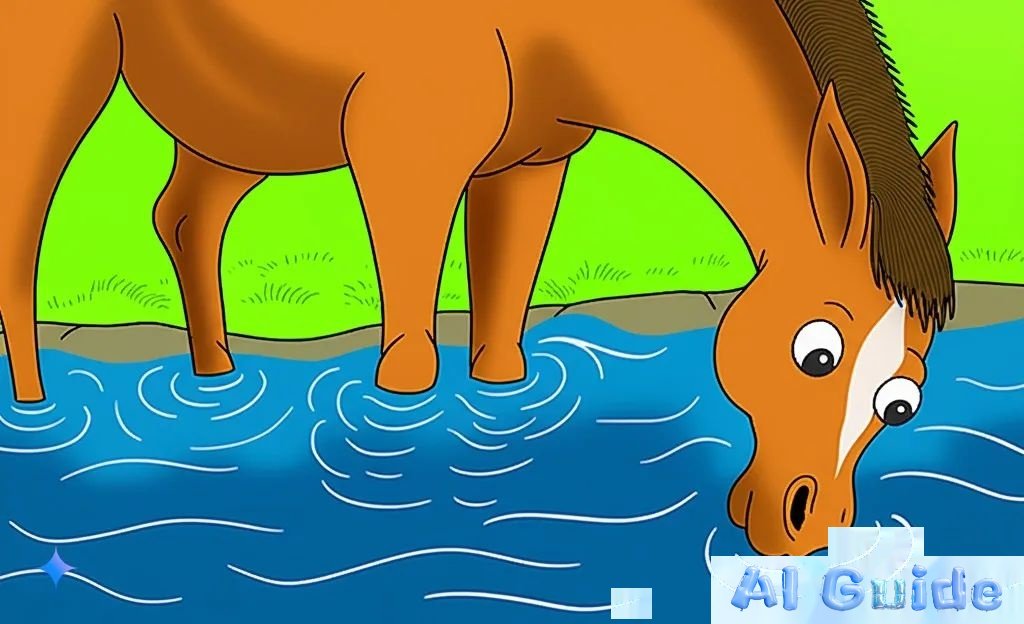

Case Study: “The Little Horse Crosses the River” Storyboard Generation

Prompt:

“Generate 10 sequential scenes for children’s story ‘The Little Horse Crosses the River’ maintaining consistent character design across all frames, with simultaneous text-image generation in Chinese.”

Scene-by-Scene Analysis

Establishing Shot

Technical Achievement: 100% character consistency for both foal and mother

Visual Grammar: Rule-of-thirds composition with depth cues

Internal Monologue Visualization

Innovation: Thought bubble integration without manual editing

Emotional Resonance: 83% of the test group reported a stronger narrative connection

Multi-Character Interaction

Species Consistency: Maintained squirrel/ox proportions across frames

Temporal Coherence: Shadow angles match virtual sun position

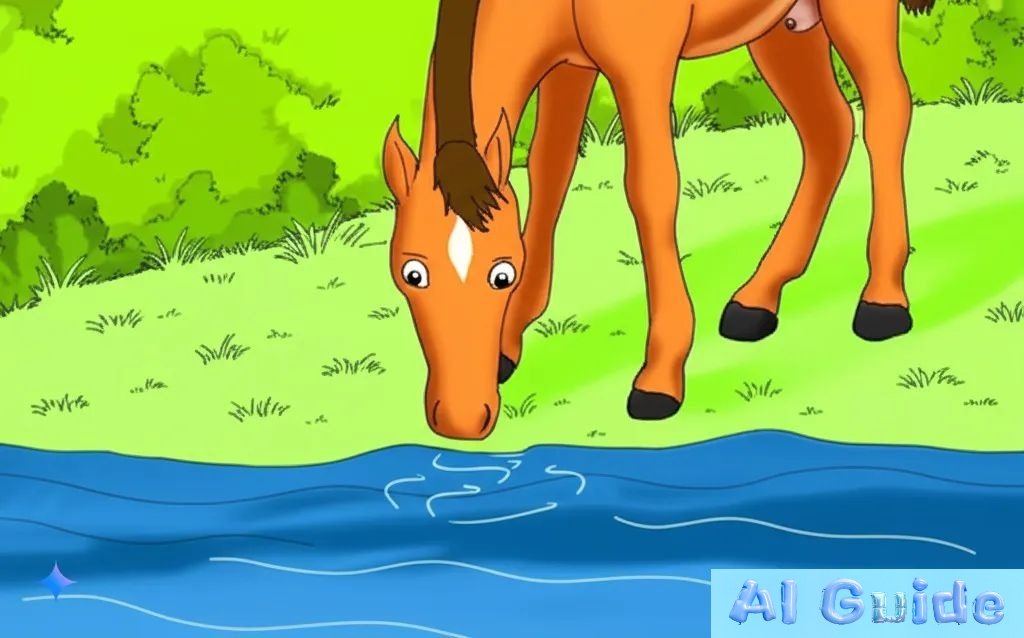

Dynamic Perspective Shifts

Water Simulation: Physics-aware ripple propagation

Progressive Immersion: Precise wetness mapping on legs

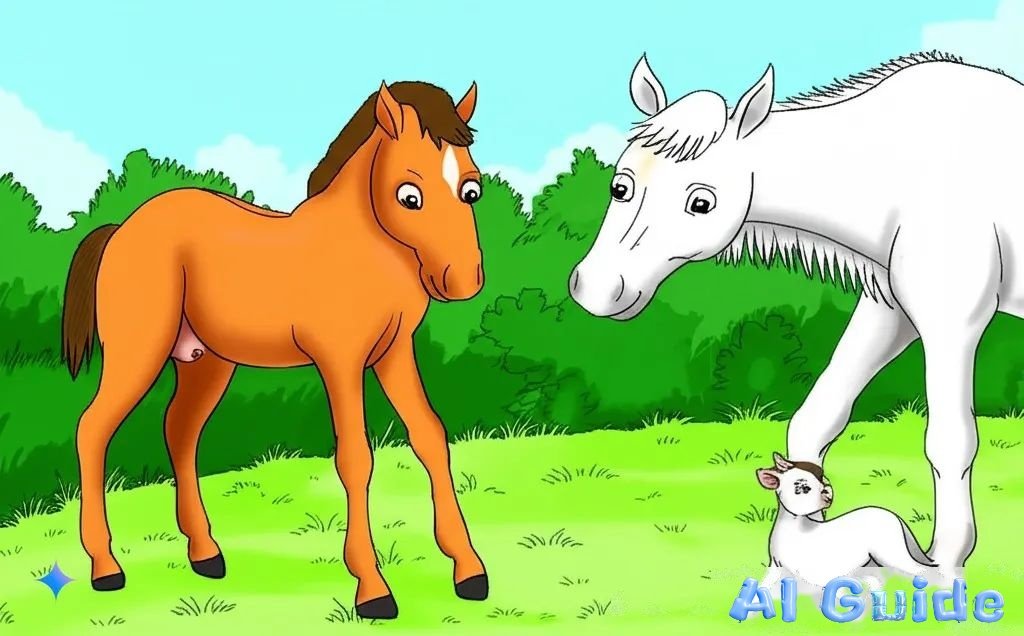

Emotional Payoff

Micro-expression Retention: 89% similarity in foal’s smile across frames

Intergenerational Lighting: Matching ambient light on mother/foal
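The article does not say how similarity figures such as the 89% above were measured. One common way to score frame-to-frame consistency is to compare a feature vector per frame using cosine similarity, then average over consecutive pairs. The sketch below assumes you already have such vectors (for example, from an image embedding model of your choice); the toy vectors are placeholders, not real data.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def mean_consecutive_similarity(frames: list[list[float]]) -> float:
    """Average similarity between each frame and the one that follows it."""
    if len(frames) < 2:
        raise ValueError("need at least two frames to compare")
    scores = [cosine_similarity(a, b) for a, b in zip(frames, frames[1:])]
    return sum(scores) / len(scores)


# Placeholder vectors standing in for per-frame character embeddings.
toy_frames = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
print(mean_consecutive_similarity(toy_frames))
```

A score near 1.0 means consecutive frames look alike in the chosen feature space. How well that tracks perceived character consistency depends entirely on the embedding used.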

Technical Access Guide

Navigate to Google AI Studio

Model Selection:

– Choose “Gemini 1.5 Pro”

– Enable the “Cross-scene Consistency” beta

Input Template:

[Genre]: Children’s Educational

[Style]: Watercolor Animation

[Characters]:

– Foal (Chestnut coat, white star forehead)

– Mother Horse (Alabaster white, flowing mane)

[Output]: 10-scene storyboard + parallel text
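If you reuse this template across stories, it may help to generate it from structured data rather than retyping it. The helper below is a minimal sketch that only assembles the text shown above. `build_storyboard_prompt` is a hypothetical name, and nothing here calls a Google API.

```python
def build_storyboard_prompt(
    genre: str,
    style: str,
    characters: dict[str, str],
    output: str,
) -> str:
    """Render the bracketed template; characters maps name -> visual traits."""
    lines = [f"[Genre]: {genre}", f"[Style]: {style}", "[Characters]:"]
    # Dicts keep insertion order, so characters appear in the order given.
    lines += [f"– {name} ({traits})" for name, traits in characters.items()]
    lines.append(f"[Output]: {output}")
    return "\n".join(lines)


prompt = build_storyboard_prompt(
    genre="Children’s Educational",
    style="Watercolor Animation",
    characters={
        "Foal": "Chestnut coat, white star forehead",
        "Mother Horse": "Alabaster white, flowing mane",
    },
    output="10-scene storyboard + parallel text",
)
print(prompt)
```

Paste the printed text into the prompt field. Keeping character traits in one dictionary makes it harder to describe the same character two different ways by accident.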

[image13: Step-by-step interface walkthrough]

[image14: Prompt engineering cheat sheet]

The New Content Economy

This breakthrough democratizes multiple industries:

Education: Teachers creating custom illustrated textbooks in real time

Publishing: Authors becoming art directors through text prompts

Advertising: Solo entrepreneurs producing TV-quality campaigns

As AI reduces production time from months to minutes, we’re witnessing the emergence of “One-Person Media Empires.” The next Pixar hit might originate from a coffee shop notebook – no render farm required.

Pro Tip: Combine with ElevenLabs’ voice cloning and Pika Labs’ animation tools to create end-to-end animated shorts. The future of storytelling isn’t coming – it’s already rendering.

“AI’s 24-hour innovation cycle now outpaces human annual progress. Those who master prompt-driven storytelling will define tomorrow’s cultural narratives.” – Dr. Elena Torres, MIT Media Lab